Deploying kube-prometheus on a binary or kubeadm Kubernetes cluster: fixing the default alerts

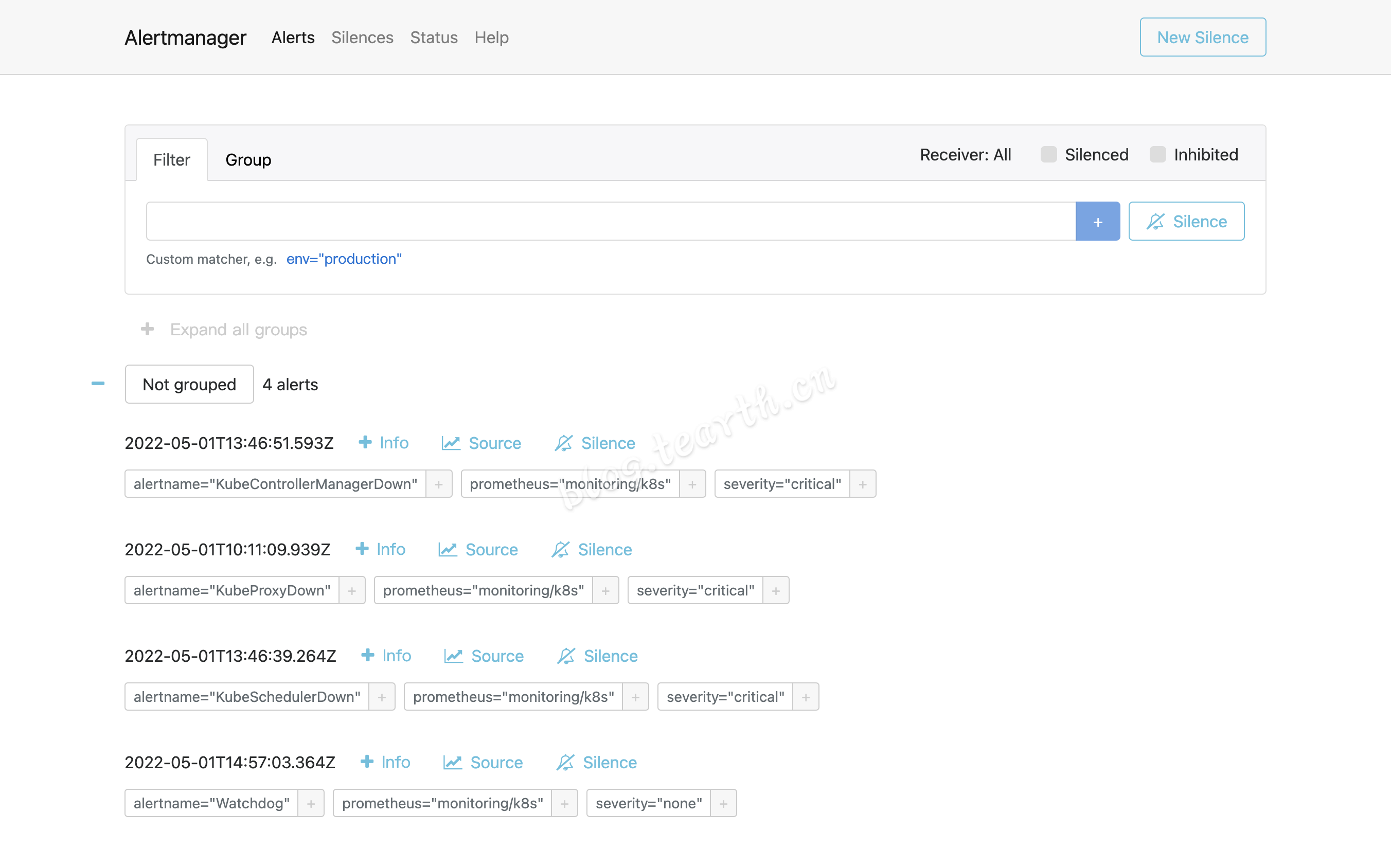

On a Kubernetes cluster built from binaries or with kubeadm, four alerts fire as soon as kube-prometheus has been deployed: KubeControllerManagerDown, KubeProxyDown, KubeSchedulerDown and Watchdog.
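The list of firing alerts can also be read from the Prometheus HTTP API (`/api/v1/alerts`) instead of the web UI. Below is a minimal Python sketch that uses only the standard library. The address `http://localhost:9090` is an assumption (for example after `kubectl -n monitoring port-forward svc/prometheus-k8s 9090`), and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Prometheus is reachable here, e.g. through a port-forward.
PROMETHEUS_URL = "http://localhost:9090"


def fetch_alerts(base_url=PROMETHEUS_URL):
    """Fetch the raw JSON document from Prometheus's /api/v1/alerts endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/v1/alerts", timeout=10) as resp:
        return json.load(resp)


def firing_alertnames(payload):
    """Return the sorted, de-duplicated names of alerts whose state is "firing"."""
    alerts = payload.get("data", {}).get("alerts", [])
    return sorted({a["labels"]["alertname"] for a in alerts if a.get("state") == "firing"})
```

Usage: `print(firing_alertnames(fetch_alerts()))`. On an unpatched cluster the output should include the four alerts listed above.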

Watchdog

The Watchdog alert's description reads: "This is an alert meant to ensure that the entire alerting pipeline is functional. This alert is always firing, therefore it should always be firing in Alertmanager and always fire against a receiver. There are integrations with various notification mechanisms that send a notification when this alert is not firing. For example the "DeadMansSnitch" integration in PagerDuty." In short, this alert exists to prove that alerting works end to end. If it ever disappears, the alerting system itself is broken. If you don't need this check, edit kubePrometheus-prometheusRule.yaml in the prometheus directory and comment out or delete the Watchdog rule:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-prometheus
    app.kubernetes.io/part-of: kube-prometheus
    prometheus: k8s
    role: alert-rules
  name: kube-prometheus-rules
  namespace: monitoring
spec:
  groups:
  - name: general.rules
    ...
    # - alert: Watchdog
    #   annotations:
    #     description: |
    #       This is an alert meant to ensure that the entire alerting pipeline is functional.
    #       This alert is always firing, therefore it should always be firing in Alertmanager
    #       and always fire against a receiver. There are integrations with various notification
    #       mechanisms that send a notification when this alert is not firing. For example the
    #       "DeadMansSnitch" integration in PagerDuty.
    #     runbook_url: https://runbooks.prometheus-operator.dev/runbooks/general/watchdog
    #     summary: An alert that should always be firing to certify that Alertmanager
    #       is working properly.
    #   expr: vector(1)
    #   labels:
    #     severity: none
  - name: node-network
    ...
  - name: kube-prometheus-node-recording.rules
    ...
  - name: kube-prometheus-general.rules
    ...
```

After the change, run `kubectl apply -f prometheus/kubePrometheus-prometheusRule.yaml` to update the rules. After a short wait, Watchdog disappears from the alert list.
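The idea behind Watchdog is a dead man's switch. Because its expression is `vector(1)`, it fires forever, and the receiving side raises an alarm when the notification *stops* arriving. The minimal Python sketch below shows that receiver-side check, which is the safeguard you give up by commenting the rule out. The function name and threshold are hypothetical and not part of kube-prometheus or PagerDuty.

```python
from datetime import datetime, timedelta


def pipeline_broken(last_watchdog_seen: datetime, now: datetime,
                    max_silence: timedelta) -> bool:
    """Dead man's switch check on the receiving side.

    Watchdog (expr: vector(1)) never resolves, so Alertmanager keeps re-sending it.
    If no Watchdog notification has arrived for longer than max_silence, some part
    of the Prometheus -> Alertmanager -> receiver chain is assumed to be broken.
    """
    return now - last_watchdog_seen > max_silence
```

`max_silence` should be longer than the Alertmanager `repeat_interval` of the route that delivers Watchdog, because that interval is how often a still-firing alert is re-notified.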

KubeControllerManagerDown

The KubeControllerManagerDown description is "KubeControllerManager has disappeared from Prometheus target discovery.", meaning Prometheus no longer discovers any kube-controller-manager target.

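The cause is the ServiceMonitor defined in kubernetesControlPlane-serviceMonitorKubeControllerManager.yaml. Its last section selects Services in the kube-system namespace that carry the label `app.kubernetes.io/name: kube-controller-manager`, and no such Service exists in a kubeadm or binary cluster: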

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: kube-controller-manager
    app.kubernetes.io/part-of: kube-prometheus
  name: kube-controller-manager
  namespace: monitoring
spec:
  ...
  jobLabel: app.kubernetes.io/name
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-controller-manager
```

Create a new directory `other`, and inside it a file `kube-system-Service.yaml` with the following content:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-controller-manager
  namespace: kube-system
  labels:
    # These labels must match the selector of the ServiceMonitor
    app.kubernetes.io/name: kube-controller-manager
spec:
  selector:
    # This label must be one of the labels on the kube-controller-manager Pods
    component: kube-controller-manager
  ports:
  - port: 10257
    targetPort: 10257
    name: https-metrics
```
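The two comments in the Service describe a two-hop lookup. First, the ServiceMonitor selects Services. Then each Service selects Pods through `spec.selector`. kubeadm labels its control-plane static Pods with `component: <name>` and `tier: control-plane`, which is why `component: kube-controller-manager` works. (With a binary deployment where the components run as host processes, there are no such Pods, so the second hop finds nothing.) Below is a simplified Python sketch of both hops. It assumes plain `matchLabels` only, ignores `matchExpressions`, and uses hypothetical function names.

```python
def labels_match(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every key/value pair in selector must appear in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def scraped_pods(service_monitor: dict, services: list, pods: list) -> list:
    """Names of the Pods a ServiceMonitor ends up scraping (simplified).

    Hop 1: ServiceMonitor -> Services via namespaceSelector.matchNames and selector.matchLabels.
    Hop 2: Service -> Pods in the same namespace via the Service's spec.selector.
    """
    spec = service_monitor["spec"]
    namespaces = set(spec["namespaceSelector"]["matchNames"])
    wanted_svc_labels = spec["selector"]["matchLabels"]
    names = []
    for svc in services:
        meta = svc["metadata"]
        if meta["namespace"] not in namespaces:
            continue
        if not labels_match(wanted_svc_labels, meta.get("labels", {})):
            continue
        pod_selector = svc.get("spec", {}).get("selector")
        if not pod_selector:  # selector-less Services get no automatic endpoints
            continue
        for pod in pods:
            pmeta = pod["metadata"]
            if pmeta["namespace"] == meta["namespace"] and labels_match(pod_selector, pmeta.get("labels", {})):
                names.append(pmeta["name"])
    return names
```

Without the Service, the first hop returns nothing. That is exactly the "disappeared from Prometheus target discovery" situation.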

Run `kubectl apply -f other/` to create the kube-controller-manager Service. After a moment the Service shows up:

```
➜ ~ kubectl get svc -n kube-system
NAME                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                        AGE
kube-controller-manager   ClusterIP   10.98.64.108   <none>        10257/TCP                      110s
kube-dns                  ClusterIP   10.96.0.10     <none>        53/UDP,53/TCP,9153/TCP         11d
kubelet                   ClusterIP   None           <none>        10250/TCP,10255/TCP,4194/TCP   10d
```

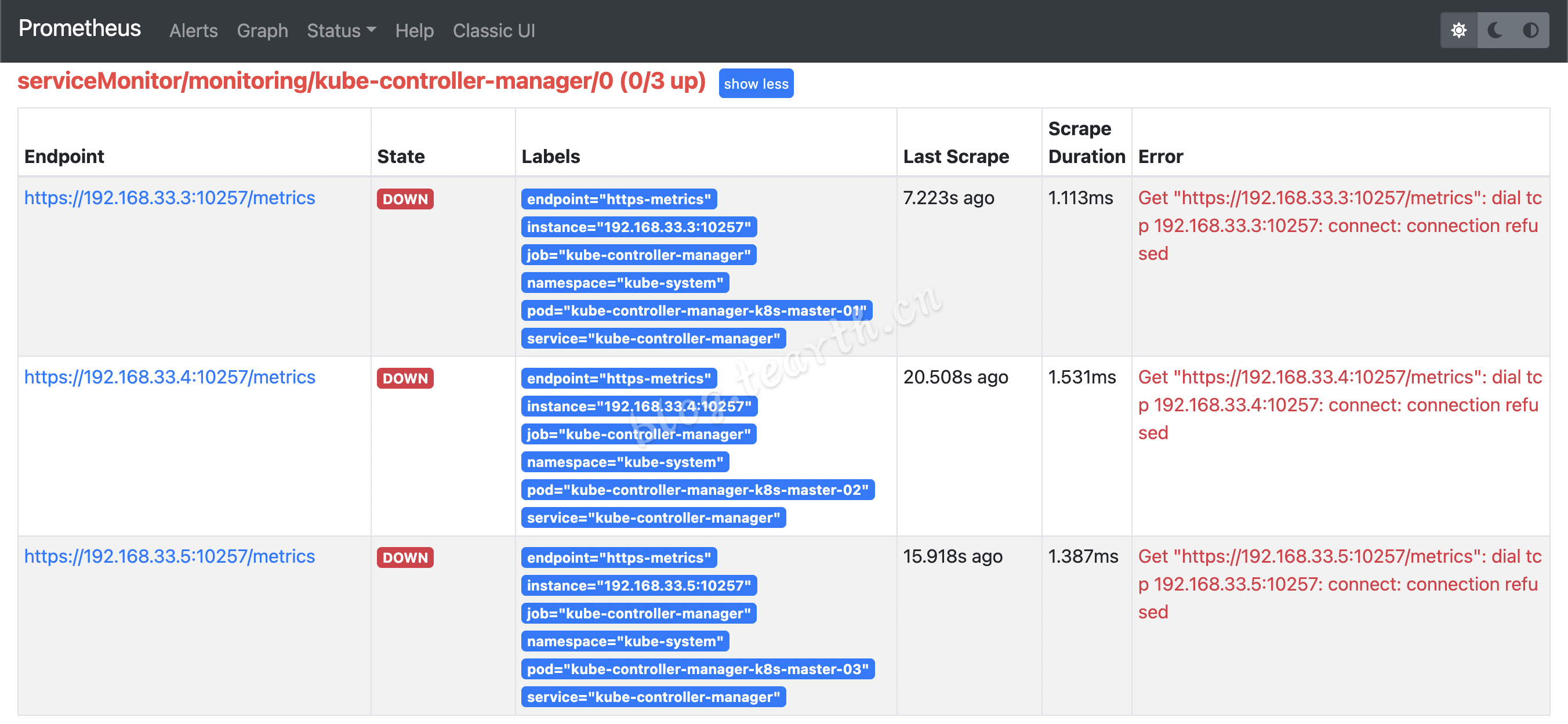

The alert does not go away, however. It changes to PrometheusTargetMissing, and the Targets page of the Prometheus web UI shows all three kube-controller-manager targets as down:

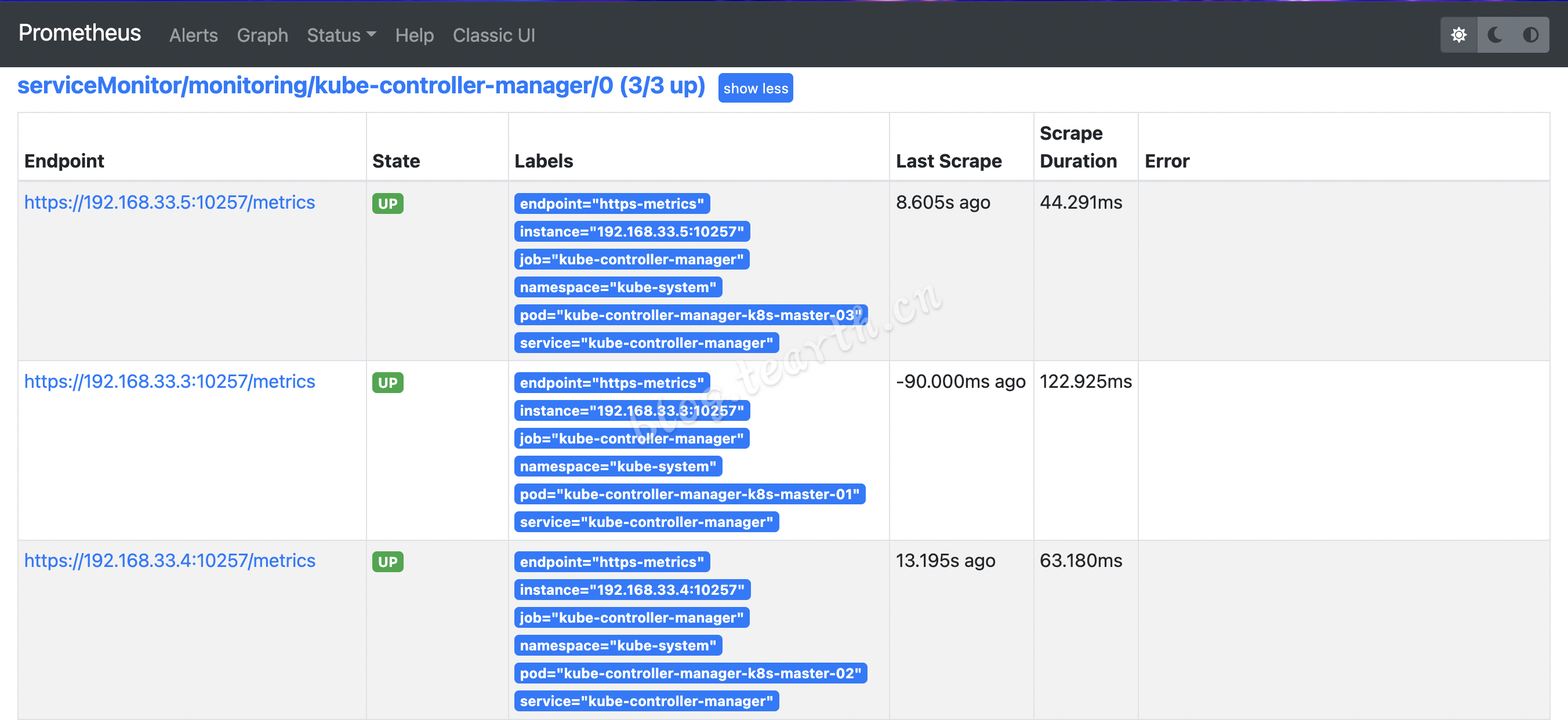
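The same information is available from the Prometheus HTTP API (`/api/v1/targets`). The `lastError` field usually says why a scrape fails, for example a refused connection. Below is a minimal standard-library sketch. The address `http://localhost:9090` is an assumption, as is the use of a port-forward to reach it, and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Prometheus is reachable here, e.g. through
# kubectl -n monitoring port-forward svc/prometheus-k8s 9090
PROMETHEUS_URL = "http://localhost:9090"


def fetch_targets(base_url=PROMETHEUS_URL):
    """Fetch the raw JSON document from Prometheus's /api/v1/targets endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=10) as resp:
        return json.load(resp)


def unhealthy_targets(payload: dict, job: Optional[str] = None) -> list:
    """Return (scrapeUrl, lastError) for every active target whose health is not "up".

    If job is given, only targets whose 'job' label equals it are considered.
    """
    result = []
    for target in payload.get("data", {}).get("activeTargets", []):
        if job is not None and target.get("labels", {}).get("job") != job:
            continue
        if target.get("health") != "up":
            result.append((target.get("scrapeUrl", ""), target.get("lastError", "")))
    return result
```

Usage: `unhealthy_targets(fetch_targets(), job="kube-controller-manager")`. The job name comes from the Service label selected by `jobLabel: app.kubernetes.io/name`.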

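This happens because kube-controller-manager listens on 127.0.0.1 by default, so it cannot be reached from outside the node. On every master node, edit /etc/kubernetes/manifests/kube-controller-manager.yaml and change `- --bind-address=127.0.0.1` to `- --bind-address=0.0.0.0`. Once you save the file, the Pod on that node restarts automatically. If it does not, restart the kubelet service or restart the Pod manually. When the Pods are back, refresh the web UI: all targets are up, and KubeControllerManagerDown is gone from the alert list.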
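Why the bind address matters: kubeadm runs the control-plane static Pods with host networking, so the endpoint Prometheus scrapes is the node's own IP. A listener bound to a loopback address accepts connections on the loopback interface only, so every scrape from another Pod is refused. Below is a rough Python sketch of that rule. It is a hypothetical helper that ignores firewalls and routing.

```python
import ipaddress


def accepts_remote_connections(bind_address: str) -> bool:
    """Rough check: can a listener bound to bind_address be reached from other hosts?

    Loopback addresses (127.0.0.0/8, ::1) only accept local connections.
    0.0.0.0 / :: listen on every interface; any other unicast address listens
    on that interface only. Firewalls and routing are ignored here.
    """
    return not ipaddress.ip_address(bind_address).is_loopback
```

With `127.0.0.1` this returns `False`, and with `0.0.0.0` it returns `True`. That difference separates the down targets from the up ones.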

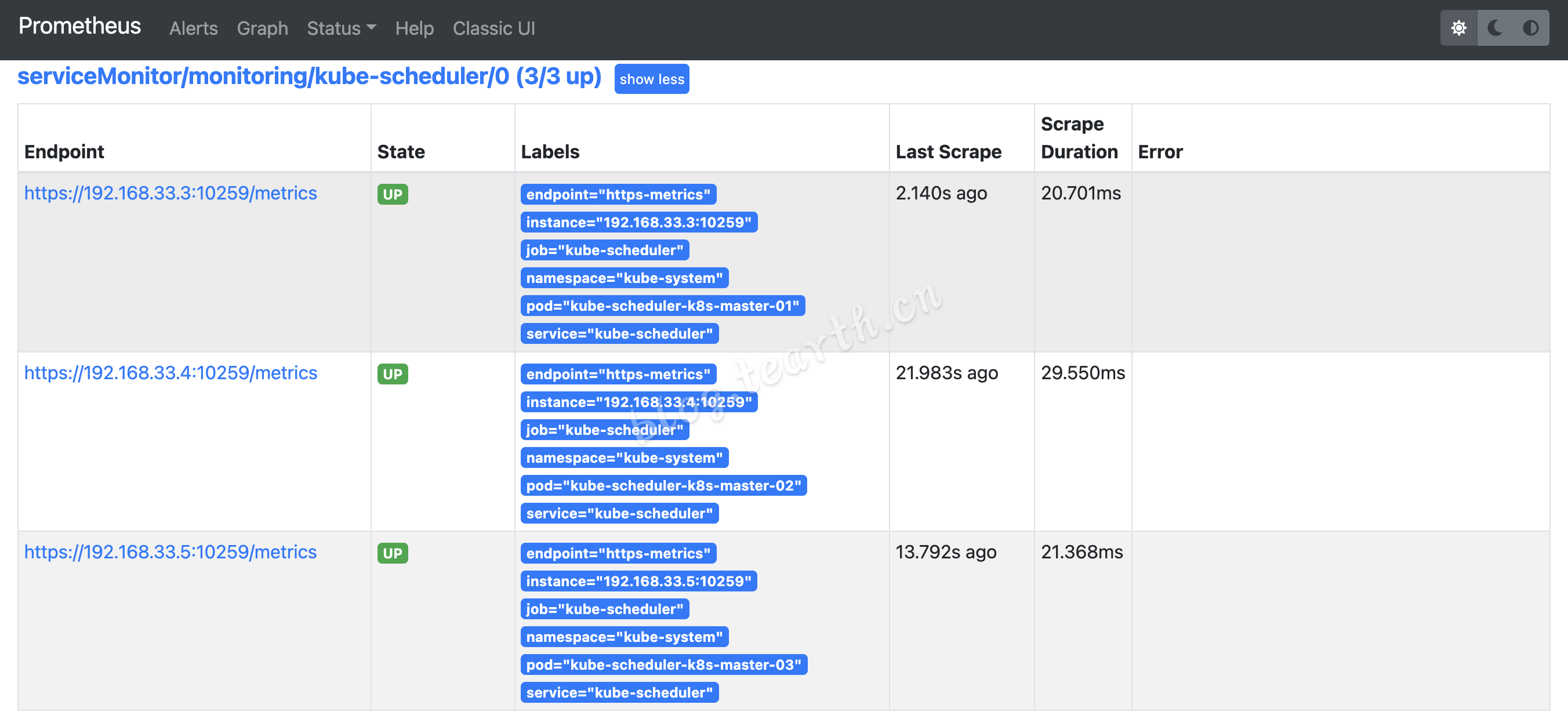

KubeSchedulerDown

KubeSchedulerDown is handled the same way. Append a kube-scheduler Service to kube-system-Service.yaml, so that the file reads:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-controller-manager
  namespace: kube-system
  labels:
    app.kubernetes.io/name: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  ports:
  - port: 10257
    targetPort: 10257
    name: https-metrics
---
apiVersion: v1
kind: Service
metadata:
  name: kube-scheduler
  namespace: kube-system
  labels:
    app.kubernetes.io/name: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  ports:
  - port: 10259
    targetPort: 10259
    name: https-metrics
```

Run `kubectl apply -f other/` to create the kube-scheduler Service.

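On every master node, edit /etc/kubernetes/manifests/kube-scheduler.yaml and change `- --bind-address=127.0.0.1` to `- --bind-address=0.0.0.0`. Once you save the file, the Pod on that node restarts automatically. If it does not, restart the kubelet service or restart the Pod manually. After the restart, refresh the web UI: the targets are up, and KubeSchedulerDown is gone from the alert list.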
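The manual edit can also be scripted when there are several masters. Below is a minimal Python sketch under the assumption that the flag appears exactly as `- --bind-address=127.0.0.1` on its own line. The helper names are hypothetical. It only rewrites that line and keeps its indentation. kubelet watches the manifests directory and recreates the static Pod when the file changes.

```python
import re
from pathlib import Path

# Matches an argument line such as "    - --bind-address=127.0.0.1" (indentation kept).
BIND_ADDRESS_LINE = re.compile(
    r"^([ \t]*-[ \t]*--bind-address=)127\.0\.0\.1[ \t]*$", re.MULTILINE)


def open_bind_address(manifest_text: str, new_address: str = "0.0.0.0") -> str:
    """Return the manifest text with --bind-address=127.0.0.1 replaced by new_address."""
    return BIND_ADDRESS_LINE.sub(lambda m: m.group(1) + new_address, manifest_text)


def patch_manifest(path: Path) -> bool:
    """Rewrite a static Pod manifest in place; return True if anything changed."""
    old = path.read_text()
    new = open_bind_address(old)
    if new != old:
        path.write_text(new)
    return new != old
```

Usage, as root on each master: `patch_manifest(Path("/etc/kubernetes/manifests/kube-scheduler.yaml"))`. The same call works for kube-controller-manager.yaml. Running it twice is harmless, because the second run finds nothing to change.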

KubeProxyDown

KubeProxyDown is similar to KubeControllerManagerDown and KubeSchedulerDown. The difference is that kube-prometheus does not create a ServiceMonitor for kube-proxy at all.

First, modelled on the other ServiceMonitor manifests, create kubernetesControlPlane-serviceMonitorKubeProxy.yaml in the kubernetesControlPlane directory, defining a ServiceMonitor named kube-proxy:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: kube-proxy
    app.kubernetes.io/part-of: kube-prometheus
  name: kube-proxy
  namespace: monitoring
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 30s
    port: http-metrics
    # kube-proxy serves metrics over plain http, so tlsConfig below has no effect
    scheme: http
    tlsConfig:
      insecureSkipVerify: true
  jobLabel: app.kubernetes.io/name
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-proxy
```

Run `kubectl apply -f kubernetesControlPlane/kubernetesControlPlane-serviceMonitorKubeProxy.yaml` to create it.
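`jobLabel: app.kubernetes.io/name` matters because of how the alert is written. In the kube-prometheus versions I am aware of, KubeProxyDown is roughly `absent(up{job="kube-proxy"} == 1)`; check the rule file shipped with your version. The Service's `app.kubernetes.io/name: kube-proxy` label therefore becomes the `job` label the alert looks for. Below is a simplified Python sketch of both pieces. The helpers are hypothetical, and the operator's real relabelling has more cases.

```python
def job_label(service_monitor: dict, service_name: str, service_labels: dict) -> str:
    """Value of the 'job' label Prometheus attaches to targets found through a ServiceMonitor.

    Simplified prometheus-operator behaviour: job defaults to the Service name and is
    overridden by the Service's value for spec.jobLabel when that label is present.
    """
    job_label_key = service_monitor.get("spec", {}).get("jobLabel")
    if job_label_key and service_labels.get(job_label_key):
        return service_labels[job_label_key]
    return service_name


def kube_proxy_down(up_samples: list) -> bool:
    """Evaluate absent(up{job="kube-proxy"} == 1) over (labels, value) samples of `up`.

    True (alert fires) when no kube-proxy target currently has up == 1.
    """
    return not any(labels.get("job") == "kube-proxy" and value == 1
                   for labels, value in up_samples)
```

The alert therefore keeps firing in two cases: when no target exists at all, because the ServiceMonitor or Service is missing, and when every kube-proxy target fails its scrape.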

Next, append a kube-proxy Service to kube-system-Service.yaml. The complete file now reads:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-controller-manager
  namespace: kube-system
  labels:
    app.kubernetes.io/name: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  ports:
  - port: 10257
    targetPort: 10257
    name: https-metrics
---
apiVersion: v1
kind: Service
metadata:
  name: kube-scheduler
  namespace: kube-system
  labels:
    app.kubernetes.io/name: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  ports:
  - port: 10259
    targetPort: 10259
    name: https-metrics
---
apiVersion: v1
kind: Service
metadata:
  name: kube-proxy
  namespace: kube-system
  labels:
    app.kubernetes.io/name: kube-proxy
spec:
  selector:
    k8s-app: kube-proxy
  ports:
  - port: 10249
    targetPort: 10249
    name: http-metrics
```

Run `kubectl apply -f other/` to create the kube-proxy Service.
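The three Services above differ only in name, Pod selector, port and port name. Below is a minimal Python sketch that generates them from one table, which keeps the Service label and the ServiceMonitor selector from drifting apart. It wraps them in a `v1` `List`, which `kubectl apply -f` should accept as JSON. The script name `gen_services.py` and the output path are hypothetical.

```python
import json

# (name, Pod selector, port, port name), taken from the Service manifests above.
COMPONENTS = [
    ("kube-controller-manager", {"component": "kube-controller-manager"}, 10257, "https-metrics"),
    ("kube-scheduler", {"component": "kube-scheduler"}, 10259, "https-metrics"),
    ("kube-proxy", {"k8s-app": "kube-proxy"}, 10249, "http-metrics"),
]


def metrics_service(name: str, selector: dict, port: int, port_name: str) -> dict:
    """Build one kube-system Service exposing a component's metrics port."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": "kube-system",
            # Must equal the matching ServiceMonitor's selector.matchLabels
            "labels": {"app.kubernetes.io/name": name},
        },
        "spec": {
            "selector": selector,
            "ports": [{"name": port_name, "port": port, "targetPort": port}],
        },
    }


def service_list() -> dict:
    """Wrap all Services in a v1 List object."""
    return {"apiVersion": "v1", "kind": "List",
            "items": [metrics_service(*c) for c in COMPONENTS]}


if __name__ == "__main__":
    print(json.dumps(service_list(), indent=2))
```

Usage: `python3 gen_services.py > other/kube-system-Service.json`, used instead of the YAML file rather than alongside it.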

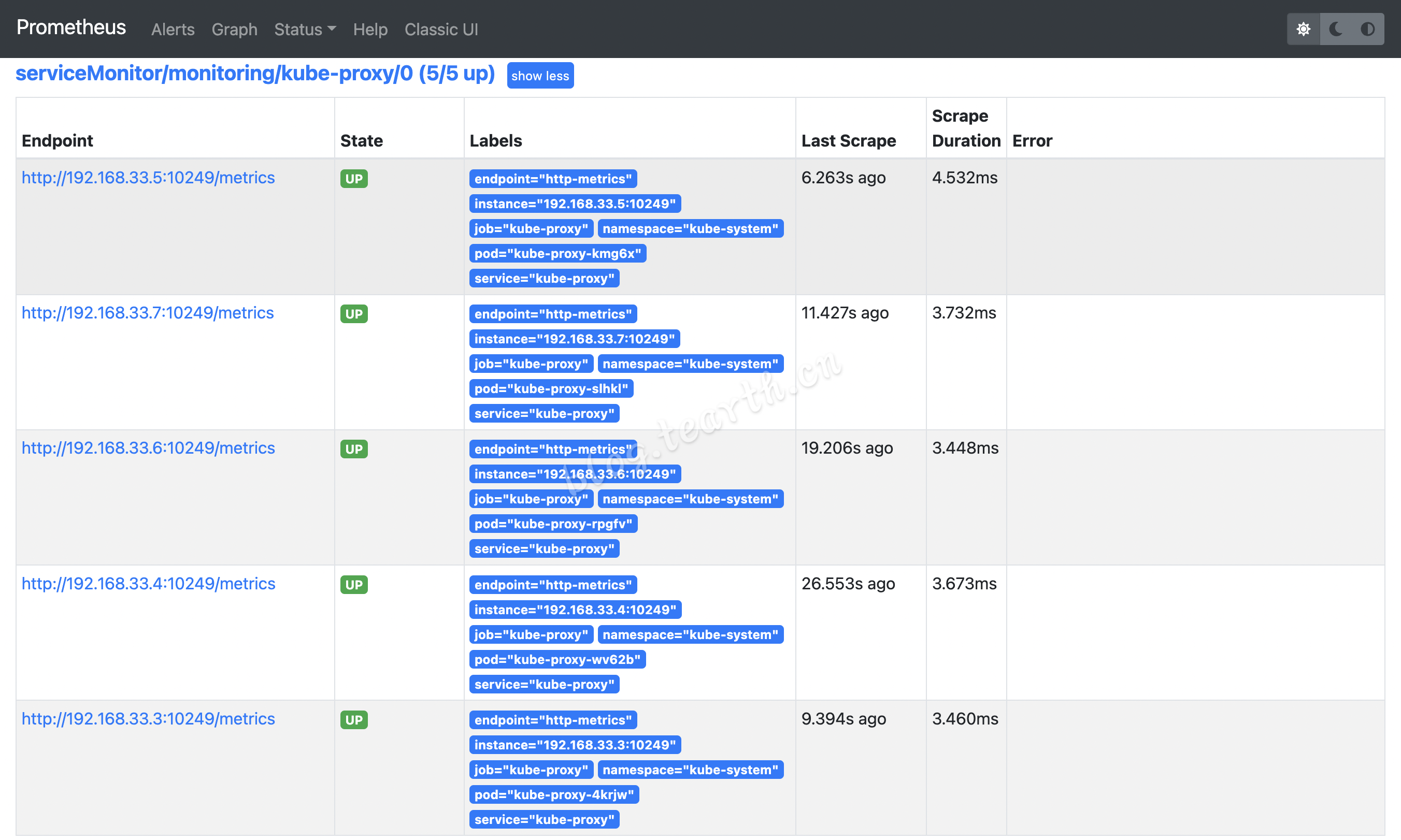

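kube-proxy also needs a different listen address, because an empty `metricsBindAddress` makes it fall back to its default of 127.0.0.1:10249. On any master node, edit the kube-proxy ConfigMap with `kubectl edit cm/kube-proxy -n kube-system` and change `metricsBindAddress: ""` to `metricsBindAddress: "0.0.0.0:10249"`. Then restart the kube-proxy Pods, for example with `kubectl rollout restart daemonset/kube-proxy -n kube-system`. After the restart and a short wait, all kube-proxy targets show as up in the web UI, and KubeProxyDown is gone from the alert list.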
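Below is a small Python sketch of how the ConfigMap value resolves. The helpers are hypothetical. The sketch only handles the `host:port` form used here and ignores other formats that newer kube-proxy versions may accept.

```python
# kube-proxy's default when metricsBindAddress is left empty.
DEFAULT_METRICS_BIND_ADDRESS = "127.0.0.1:10249"


def effective_metrics_bind_address(configured: str) -> str:
    """Return the address kube-proxy actually listens on for metrics (simplified)."""
    return configured.strip() or DEFAULT_METRICS_BIND_ADDRESS


def listens_on_all_interfaces(configured: str) -> bool:
    """True if the effective host part is the IPv4 or IPv6 wildcard address."""
    host, _, _port = effective_metrics_bind_address(configured).rpartition(":")
    return host in ("0.0.0.0", "[::]")
```

`listens_on_all_interfaces("")` is `False`, which is why the targets stay down until the ConfigMap is changed to `"0.0.0.0:10249"`.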

Reference: https://blog.tearth.cn/index.php/archives/37/