Quickly Deploying a K8S Cluster with RKE

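This post deploys a Kubernetes (K8S) cluster with RKE. RKE (Rancher Kubernetes Engine) is a CNCF-certified, open-source Kubernetes distribution that runs entirely inside Docker containers. It removes most host dependencies and provides a stable path for deployment, upgrades and rollbacks, which solves the most common sources of Kubernetes installation complexity. Note that the three-node layout used below (one controlplane, one worker, one etcd node) is not highly available. An HA cluster needs at least three etcd nodes and two or more controlplane nodes.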

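With RKE, Kubernetes becomes independent of the underlying operating system and platform, which makes automated operation straightforward. Any host running a supported Docker version can deploy and run Kubernetes through RKE.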

RKE official documentation and overview: https://www.rancher.cn/products/rke/

Environment

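Each machine must meet the following requirements:

- One or more machines running CentOS 7.x x86_64 (I used CentOS 7.9)

- Hardware: 2 GB RAM or more, 2 CPUs or more, 30 GB disk or more

- Full network connectivity between all machines in the cluster

- Internet access for pulling images; if the servers cannot reach the Internet, download the images in advance and import them on each node

- Swap disabled (see the prerequisites in my earlier notes on deploying K8S from binaries)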

Server plan

| Name | IP | Components |
|---|---|---|
| master01 | 10.80.211.180 | controlplane, rancher, rke, kubectl |
| node01 | 10.80.211.181 | worker |
| etcd01 | 10.80.211.182 | etcd |

Software environment

| Software | Version |
|---|---|
| Docker | 20.10.18 |
| OS | CentOS 7.9 |

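Initial environment setup

Run the following on all machines:

# Set the hostname according to the plan
hostnamectl set-hostname <hostname> # master01, node01 and etcd01 respectively
hostname # confirm that the change took effect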

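# Stop and disable the firewall (a minimal install may not include firewalld)
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux: immediately, and permanently in the config file
setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable swap: immediately, and permanently by commenting it out in /etc/fstab
swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab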

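# Raise the open-file and process limits
# ulimit applies to the current shell only; limits.conf applies to new login sessions
ulimit -SHn 65535
# A soft limit must not exceed the hard limit for the same item
cat >> /etc/security/limits.conf <<EOF
* soft nofile 655360
* hard nofile 655360
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF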
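For each item, a soft limit cannot exceed the hard limit, so a soft value set higher than its hard value does not take effect as written, and a misspelled type such as `seft` is not a valid entry. The following is a minimal awk sketch (`check_limits` is a hypothetical helper, not part of any package) that flags both problems in `/etc/security/limits.conf`. It only understands plain `domain type item value` lines and treats `unlimited`/`infinity` as larger than any number.

```shell
#!/usr/bin/env bash
# Sketch: report limits.conf entries whose soft value exceeds the hard value for
# the same domain and item, and lines whose type is not soft, hard or "-".
# check_limits is a hypothetical helper name.
check_limits() {
  local file="${1:-/etc/security/limits.conf}"
  awk '
    # "unlimited"/"infinity" compare as larger than any number
    function num(v) { return (v == "unlimited" || v == "infinity") ? 1e18 : v + 0 }
    /^[ \t]*#/ || NF < 4 { next }                 # comments and short lines
    {
      key = $1 SUBSEP $3
      if ($2 == "soft")      soft[key] = $4
      else if ($2 == "hard") hard[key] = $4
      else if ($2 == "-")    { soft[key] = $4; hard[key] = $4 }
      else { printf "line %d: unknown type \"%s\"\n", NR, $2; bad = 1 }
    }
    END {
      for (k in soft)
        if ((k in hard) && num(soft[k]) > num(hard[k])) {
          split(k, p, SUBSEP)
          printf "%s %s: soft %s > hard %s\n", p[1], p[2], soft[k], hard[k]
          bad = 1
        }
      exit bad
    }' "$file"
}

check_limits && echo "limits.conf looks consistent"
```

The exit status is non-zero whenever something is reported, so the function can also be used as a guard in a provisioning script.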

# Add host entries (at least on master01; the ssh-copy-id commands below use these names)
cat >> /etc/hosts << EOF
10.80.211.180 master01
10.80.211.181 node01
10.80.211.182 etcd01
EOF

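# Pass bridged IPv4/IPv6 traffic to the iptables chains and tune kernel parameters
cat > /etc/sysctl.d/k8s.conf << EOF
# Pass bridged IPv4 traffic to iptables [important]
net.bridge.bridge-nf-call-iptables=1
# Pass bridged IPv6 traffic to ip6tables [important]
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
# Avoid swap; only use it when the system is about to run out of memory
vm.swappiness=0
# Do not check whether enough physical memory is available
vm.overcommit_memory=1
# Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
# Disable IPv6 [important]
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF

# Load the bridge netfilter module (the net.bridge.* keys above depend on it)
modprobe br_netfilter
# Load it automatically on every boot as well
echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
# Check that the module is loaded
lsmod | grep br_netfilter
# Apply the settings
sysctl -p /etc/sysctl.d/k8s.conf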
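To confirm, now or after a reboot, that the kernel really uses these values (a net.bridge.* key, for instance, only exists while br_netfilter is loaded), here is a minimal sketch that compares each key in `/etc/sysctl.d/k8s.conf` with the live value under `/proc/sys`. It assumes single-value keys like the ones above; `verify_sysctl` and the `PROC_SYS` override are hypothetical names used for illustration and testing.

```shell
#!/usr/bin/env bash
# Sketch: compare each key=value in a sysctl drop-in file with the value the
# kernel is actually using. PROC_SYS is a hypothetical override for testing.
PROC_SYS="${PROC_SYS:-/proc/sys}"

verify_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" rc=0 key want have path
  [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 2; }
  while IFS='=' read -r key want || [ -n "$key" ]; do
    # drop all whitespace: fine for the single-value keys used in this guide
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    want=$(printf '%s' "$want" | tr -d '[:space:]')
    case "$key" in ''|'#'*|';'*) continue ;; esac   # blank or comment line
    path="$PROC_SYS/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      echo "MISSING $key (is the kernel module loaded?)"; rc=1; continue
    fi
    have=$(tr -d '[:space:]' < "$path")
    if [ "$have" = "$want" ]; then
      echo "OK $key=$have"
    else
      echo "DIFF $key: want $want, have $have"; rc=1
    fi
  done < "$conf"
  return "$rc"
}

verify_sysctl || echo "some settings are not active" >&2
```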

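# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com

# Or run it periodically from cron: open the crontab with
crontab -e
# and add this line (sync every hour):
0 */1 * * * /usr/sbin/ntpdate ntp1.aliyun.com

# Stop and disable the mail service
systemctl stop postfix && systemctl disable postfix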

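Install Docker and Docker Compose

These steps are omitted here; see my earlier deployment notes: https://199604.com/2011 and https://199604.com/2039

Create a regular user

For security, avoid working as root where possible and add a dedicated account for Docker operations instead.

Repeat this on every node.

Create the rancher user and add it to the docker group: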

useradd rancher

usermod -aG docker rancher

passwd rancher

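Create an SSH key on the RKE host

On the RKE host (master01), create an SSH key:

# Generate the key pair
ssh-keygen

# Copy the public key to every node (including master01 itself)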

ssh-copy-id rancher@master01

ssh-copy-id rancher@node01

ssh-copy-id rancher@etcd01
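RKE connects to every node over SSH as the configured user and talks to Docker through that user's access to the Docker socket, so both must work without a password prompt before running rke up. A minimal sketch of that check, run from master01; it assumes the host names from /etc/hosts and the rancher user, and `check_node` and the `SSH_CMD` override are hypothetical names used for illustration and testing.

```shell
#!/usr/bin/env bash
# Sketch: confirm that master01 can reach every node over SSH without a
# password and that the remote user can talk to Docker (what RKE relies on).
# SSH_CMD and SSH_USER are overridable; the defaults match this guide.
SSH_CMD="${SSH_CMD:-ssh}"
SSH_USER="${SSH_USER:-rancher}"

check_node() {
  local host="$1" ver
  # BatchMode: fail instead of prompting; ConnectTimeout: do not hang on dead hosts
  if ver=$("$SSH_CMD" -o BatchMode=yes -o ConnectTimeout=5 "$SSH_USER@$host" \
      "docker version --format '{{.Server.Version}}'" 2>/dev/null); then
    echo "OK $host (docker $ver)"
  else
    echo "FAIL $host"
  fi
}

for h in master01 node01 etcd01; do
  check_node "$h"
done
```

A FAIL usually means either the key was not copied or the user is not yet in the docker group (group changes apply to new logins only).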

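Reboot every machine

It is best to reboot each machine now, so that all settings take effect and to avoid unnecessary trouble later.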
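After the reboot, a quick read-only sketch can confirm on each node that the settings survived: swap is still off, br_netfilter is loaded, SELinux is not enforcing, and rancher is in the docker group. The function names and the `PROC_DIR` override are hypothetical, the latter only there to exercise the checks against test files.

```shell
#!/usr/bin/env bash
# Sketch: read-only re-check of node prerequisites after a reboot.
# PROC_DIR is a hypothetical override so the checks can run against test files.
PROC_DIR="${PROC_DIR:-/proc}"

# /proc/swaps has one header line; every further line is an active swap area
swap_off()        { [ "$(tail -n +2 "$PROC_DIR/swaps" 2>/dev/null | grep -c .)" -eq 0 ]; }
# /proc/modules lists one loaded module per line, name first
module_loaded()   { grep -q "^$1 " "$PROC_DIR/modules" 2>/dev/null; }
# treat a missing getenforce (no SELinux tooling) as disabled
selinux_relaxed() { [ "$(getenforce 2>/dev/null || echo Disabled)" != "Enforcing" ]; }
in_group()        { id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx "$2"; }

check() {  # check <label> <command...>: print OK or FAIL for one test
  local label="$1"; shift
  if "$@"; then echo "OK $label"; else echo "FAIL $label"; fi
}

check "swap disabled"           swap_off
check "br_netfilter loaded"     module_loaded br_netfilter
check "SELinux not enforcing"   selinux_relaxed
check "rancher in docker group" in_group rancher docker
```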

Deploy the cluster

Download the RKE tool

Check the GitHub releases page for the latest version.

wget https://github.com/rancher/rke/releases/download/v1.3.14/rke_linux-amd64
install -m 0755 rke_linux-amd64 /usr/local/bin/rke
ln -s /usr/local/bin/rke /usr/bin/rke
rke -v
# rke version v1.3.14

Generate the configuration file

mkdir -p /data/rancher
cd /data/rancher
rke config --name cluster.yml

Answer the prompts as follows:

[root@master01 rancher]# rke config --name cluster.yml
[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]: # use ~/.ssh/id_rsa
[+] Number of Hosts [1]: 3 # number of hosts in the cluster
[+] SSH Address of host (1) [none]: 10.80.211.180 # address of host 1
[+] SSH Port of host (1) [22]: # SSH port
[+] SSH Private Key Path of host (10.80.211.180) [none]: ~/.ssh/id_rsa # use ~/.ssh/id_rsa
[+] SSH User of host (10.80.211.180) [ubuntu]: rancher # SSH user; rancher is used here
[+] Is host (10.80.211.180) a Control Plane host (y/n)? [y]: y # run the control plane?
[+] Is host (10.80.211.180) a Worker host (y/n)? [n]: n # worker node?
[+] Is host (10.80.211.180) an etcd host (y/n)? [n]: n # run etcd?
[+] Override Hostname of host (10.80.211.180) [none]: # override the hostname?
[+] Internal IP of host (10.80.211.180) [none]: # internal IP of the host
[+] Docker socket path on host (10.80.211.180) [/var/run/docker.sock]: # Docker socket path; keep the default
[+] SSH Address of host (2) [none]: 10.80.211.181 # host 2; the remaining hosts are configured the same way
[+] SSH Port of host (2) [22]:
[+] SSH Private Key Path of host (10.80.211.181) [none]: ~/.ssh/id_rsa
[+] SSH User of host (10.80.211.181) [ubuntu]: rancher
[+] Is host (10.80.211.181) a Control Plane host (y/n)? [y]: n
[+] Is host (10.80.211.181) a Worker host (y/n)? [n]: y
[+] Is host (10.80.211.181) an etcd host (y/n)? [n]: n
[+] Override Hostname of host (10.80.211.181) [none]:
[+] Internal IP of host (10.80.211.181) [none]:
[+] Docker socket path on host (10.80.211.181) [/var/run/docker.sock]:
[+] SSH Address of host (3) [none]: 10.80.211.182
[+] SSH Port of host (3) [22]:
[+] SSH Private Key Path of host (10.80.211.182) [none]: ~/.ssh/id_rsa
[+] SSH User of host (10.80.211.182) [ubuntu]: rancher
[+] Is host (10.80.211.182) a Control Plane host (y/n)? [y]: n
[+] Is host (10.80.211.182) a Worker host (y/n)? [n]: n
[+] Is host (10.80.211.182) an etcd host (y/n)? [n]: y
[+] Override Hostname of host (10.80.211.182) [none]:
[+] Internal IP of host (10.80.211.182) [none]:
[+] Docker socket path on host (10.80.211.182) [/var/run/docker.sock]:
[+] Network Plugin Type (flannel, calico, weave, canal, aci) [canal]: # network plugin
[+] Authentication Strategy [x509]: # authentication strategy
[+] Authorization Mode (rbac, none) [rbac]: # authorization mode
[+] Kubernetes Docker image [rancher/hyperkube:v1.24.4-rancher1]: rancher/hyperkube:v1.21.14-rancher1 # Kubernetes image; v1.21.14 is used here
[+] Cluster domain [cluster.local]: # cluster domain
[+] Service Cluster IP Range [10.43.0.0/16]: # IP range for cluster services
[+] Enable PodSecurityPolicy [n]: # PodSecurityPolicy
[+] Cluster Network CIDR [10.42.0.0/16]: # pod network CIDR
[+] Cluster DNS Service IP [10.43.0.10]: # cluster DNS service IP
[+] Add addon manifest URLs or YAML files [no]: # add addon manifest URLs or YAML files?

This generates a cluster.yml file in the current directory:

# ls

cluster.yml

You can also write this file by hand, as long as it keeps the same format. For example:

[root@master01 rancher]# cat cluster.yml
# If you intended to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: 10.80.211.180
  port: "22"
  internal_address: ""
  role:
  - controlplane
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 10.80.211.181
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 10.80.211.182
  port: "22"
  internal_address: ""
  role:
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []

services:
  etcd:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []

network:
  plugin: canal
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.4
  alpine: rancher/rke-tools:v0.1.87
  nginx_proxy: rancher/rke-tools:v0.1.87
  cert_downloader: rancher/rke-tools:v0.1.87
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.87
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.21.1
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.21.1
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.21.1
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  coredns: rancher/mirrored-coredns-coredns:1.9.3
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.21.14-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.22.0
  calico_cni: rancher/calico-cni:v3.22.0-rancher1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.22.0
  calico_ctl: rancher/mirrored-calico-ctl:v3.22.0
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.0
  canal_node: rancher/mirrored-calico-node:v3.22.0
  canal_cni: rancher/calico-cni:v3.22.0-rancher1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.22.0
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.0
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.2.1-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.6.1
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.2.3.2.1d150da
  aci_host_container: noiro/aci-containers-host:5.2.3.2.1d150da
  aci_opflex_container: noiro/opflex:5.2.3.2.1d150da
  aci_mcast_container: noiro/opflex:5.2.3.2.1d150da
  aci_ovs_container: noiro/openvswitch:5.2.3.2.1d150da
  aci_controller_container: noiro/aci-containers-controller:5.2.3.2.1d150da
  aci_gbp_server_container: noiro/gbp-server:5.2.3.2.1d150da
  aci_opflex_server_container: noiro/opflex-server:5.2.3.2.1d150da
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null
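Most of the fields above are RKE defaults, and RKE fills in defaults for anything left out, so a much shorter hand-written cluster.yml is usually enough. Here is a sketch that writes one for the same three hosts. The `kubernetes_version` string is an assumption to check against `rke config --list-version --all` for your RKE release; `write_cluster_yml` is a hypothetical helper, and `OUT_DIR` defaults to the current directory (run it from /data/rancher).

```shell
#!/usr/bin/env bash
# Sketch: write a minimal cluster.yml for the three-node layout of this guide.
# Anything not listed falls back to RKE's defaults.
OUT_DIR="${OUT_DIR:-.}"   # assumption: run from /data/rancher

write_cluster_yml() {
  local out="$OUT_DIR/cluster.yml"
  # do not clobber a file generated by "rke config"
  if [ -e "$out" ]; then echo "$out already exists" >&2; return 1; fi
  cat > "$out" <<'EOF'
nodes:
  - address: 10.80.211.180
    user: rancher
    role: [controlplane]
  - address: 10.80.211.181
    user: rancher
    role: [worker]
  - address: 10.80.211.182
    user: rancher
    role: [etcd]
ssh_key_path: ~/.ssh/id_rsa
# Assumed version string; list the valid ones with: rke config --list-version --all
kubernetes_version: v1.21.14-rancher1-1
network:
  plugin: canal
EOF
}

write_cluster_yml && echo "wrote $OUT_DIR/cluster.yml"
```

Pinning `kubernetes_version` instead of listing every image under `system_images` lets RKE choose the matching image set for that version.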

If you plan to deploy Kubeflow or Istio later, you must set the following parameters under services in cluster.yml:

services:
  kube-api:
    image: ""
    extra_args:
      # Required if Kubeflow or Istio will be deployed later
      service-account-issuer: "kubernetes.default.svc"
      service-account-signing-key-file: "/etc/kubernetes/ssl/kube-service-account-token-key.pem"
  kube-controller:
    image: ""
    extra_args:
      # Required if Kubeflow or Istio will be deployed later
      cluster-signing-cert-file: "/etc/kubernetes/ssl/kube-ca.pem"
      cluster-signing-key-file: "/etc/kubernetes/ssl/kube-ca-key.pem"

Bring up the cluster

Run:

rke up

# rke up

.....

INFO[0643] [worker] Successfully started Worker Plane..

INFO[0643] Image [rancher/rke-tools:v0.1.87] exists on host [10.80.211.181]

INFO[0643] Image [rancher/rke-tools:v0.1.87] exists on host [10.80.211.180]

INFO[0643] Image [rancher/rke-tools:v0.1.87] exists on host [10.80.211.182]

INFO[0643] Starting container [rke-log-cleaner] on host [10.80.211.180], try #1

INFO[0643] Starting container [rke-log-cleaner] on host [10.80.211.182], try #1

INFO[0643] Starting container [rke-log-cleaner] on host [10.80.211.181], try #1

INFO[0643] [cleanup] Successfully started [rke-log-cleaner] container on host [10.80.211.182]

INFO[0643] Removing container [rke-log-cleaner] on host [10.80.211.182], try #1

INFO[0643] [cleanup] Successfully started [rke-log-cleaner] container on host [10.80.211.180]

INFO[0643] Removing container [rke-log-cleaner] on host [10.80.211.180], try #1

INFO[0644] [remove/rke-log-cleaner] Successfully removed container on host [10.80.211.180]

INFO[0644] [cleanup] Successfully started [rke-log-cleaner] container on host [10.80.211.181]

INFO[0644] [remove/rke-log-cleaner] Successfully removed container on host [10.80.211.182]

INFO[0644] Removing container [rke-log-cleaner] on host [10.80.211.181], try #1

INFO[0644] [remove/rke-log-cleaner] Successfully removed container on host [10.80.211.181]

INFO[0644] [sync] Syncing nodes Labels and Taints

INFO[0644] [sync] Successfully synced nodes Labels and Taints

INFO[0644] [network] Setting up network plugin: canal

INFO[0644] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0644] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0644] [addons] Executing deploy job rke-network-plugin

INFO[0684] [addons] Setting up coredns

INFO[0684] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0684] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0684] [addons] Executing deploy job rke-coredns-addon

INFO[0689] [addons] CoreDNS deployed successfully

INFO[0689] [dns] DNS provider coredns deployed successfully

INFO[0689] [addons] Setting up Metrics Server

INFO[0689] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0689] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0689] [addons] Executing deploy job rke-metrics-addon

INFO[0694] [addons] Metrics Server deployed successfully

INFO[0694] [ingress] Setting up nginx ingress controller

INFO[0694] [ingress] removing admission batch jobs if they exist

INFO[0694] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0694] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0694] [addons] Executing deploy job rke-ingress-controller

INFO[0699] [ingress] removing default backend service and deployment if they exist

INFO[0699] [ingress] ingress controller nginx deployed successfully

INFO[0699] [addons] Setting up user addons

INFO[0699] [addons] no user addons defined

INFO[0699] Finished building Kubernetes cluster successfully

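If it fails, troubleshoot further; scroll down to the error collection to see whether your error matches one I ran into.

After a successful run, the current directory contains these additional files: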

[root@master01 rancher]# ls

cluster.rkestate cluster.yml kube_config_cluster.yml
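RKE needs cluster.rkestate (together with cluster.yml) for later rke up runs against the same cluster, and kube_config_cluster.yml holds admin credentials, so it is worth keeping copies somewhere safe. A minimal sketch that copies all three into a timestamped directory; `backup_rke_state` is a hypothetical helper and the backup location /data/rancher-backup is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: copy the files produced by rke up into a timestamped backup folder.
# SRC_DIR and BACKUP_ROOT defaults are assumptions; adjust to your layout.
SRC_DIR="${SRC_DIR:-/data/rancher}"
BACKUP_ROOT="${BACKUP_ROOT:-/data/rancher-backup}"

backup_rke_state() {
  local dest f
  dest="$BACKUP_ROOT/$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$dest" || return 1
  for f in cluster.yml cluster.rkestate kube_config_cluster.yml; do
    if [ -f "$SRC_DIR/$f" ]; then
      cp -p "$SRC_DIR/$f" "$dest/" || return 1
    else
      echo "warning: $SRC_DIR/$f not found" >&2
    fi
  done
  chmod 700 "$dest"   # the files contain certificates and private keys
  echo "$dest"
}

backup_rke_state || echo "backup failed" >&2
```

Running it again after every successful rke up keeps the saved state in step with the cluster.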

Check the cluster with kubectl

Download kubectl

Use a kubectl version that matches the cluster (v1.21.14 here); other versions are listed on GitHub.

wget https://dl.k8s.io/release/v1.21.14/bin/linux/amd64/kubectl
chmod +x kubectl
mv kubectl /usr/local/bin/
ln -s /usr/local/bin/kubectl /usr/bin/kubectl
kubectl version --client

Configure the kubeconfig file

mkdir -p ~/.kube
cp /data/rancher/kube_config_cluster.yml ~/.kube/config

Check the cluster status

[root@master01 rancher]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.80.211.180 Ready controlplane 14h v1.21.14

10.80.211.181 Ready worker 14h v1.21.14

10.80.211.182 Ready etcd 14h v1.21.14

[root@master01 rancher]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}
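Since `kubectl get cs` is deprecated (as the warning says), a more direct readiness check is to wait on the node conditions and then look for system pods that are not running. A minimal sketch using `kubectl wait` and a field selector; `wait_cluster_ready` and the `KUBECTL` override are hypothetical names used for illustration and testing.

```shell
#!/usr/bin/env bash
# Sketch: wait until every node is Ready, then list kube-system pods that are
# neither Running nor Succeeded. KUBECTL is overridable (assumption, for tests).
KUBECTL="${KUBECTL:-kubectl}"

wait_cluster_ready() {  # wait_cluster_ready [timeout, e.g. 300s]
  local timeout="${1:-300s}"
  "$KUBECTL" wait --for=condition=Ready nodes --all --timeout="$timeout" || return 1
  "$KUBECTL" get pods -n kube-system \
    --field-selector=status.phase!=Running,status.phase!=Succeeded
}

wait_cluster_ready 300s || echo "cluster is not ready yet" >&2
```

An empty pod list ("No resources found") after the wait means the network plugin, CoreDNS, metrics server and ingress deployed by rke up are all running.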

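Updating the cluster

Adding or removing worker nodes

RKE supports adding and removing worker and controlplane nodes.

To add nodes, add their entries to cluster.yml and specify each node's role in the Kubernetes cluster; to remove a node, delete its entry from the nodes list in cluster.yml.

Running rke up --update-only adds or removes worker nodes only; everything in cluster.yml other than the worker nodes is ignored.

Even with --update-only, adding or removing worker nodes may still trigger a redeploy or update of add-ons and other components.

A new node must be prepared the same way as the existing ones: install Docker, create the rancher user, disable swap, and so on.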
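Adding a worker comes down to one more entry in the nodes list plus a rerun of rke up. A minimal sketch that prints such an entry in the layout rke config generates; `node_entry` is a hypothetical helper and 10.80.211.183 is a made-up address, not part of the plan above.

```shell
#!/usr/bin/env bash
# Sketch: print a nodes entry in the same layout that rke config generates,
# ready to paste under "nodes:". The address used below is hypothetical.
node_entry() {  # node_entry <address> <role> [ssh-user]
  local addr="$1" role="$2" user="${3:-rancher}"
  cat <<EOF
- address: $addr
  port: "22"
  user: $user
  role:
  - $role
  docker_socket: /var/run/docker.sock
  ssh_key_path: ~/.ssh/id_rsa
EOF
}

node_entry 10.80.211.183 worker   # hypothetical new worker node
# After pasting the entry into cluster.yml, apply only the worker change:
#   cd /data/rancher && rke up --update-only --config cluster.yml
```

Removing a worker is the reverse: delete its entry and run the same rke up command.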

Deploy the cluster management web UI

The Rancher dashboard makes it easy to manage the K8S cluster: view its status, edit it, and so on.

Start Rancher with Docker:

docker run -d --restart=unless-stopped --privileged --name rancher -p 80:80 -p 443:443 rancher/rancher:v2.5.16

Then open http://<master01 IP> (port 80) in a browser.
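The Rancher container needs a little while before the UI answers. A minimal sketch that polls Rancher's /ping health endpoint (it replies `pong` once the server is up) before you open the browser; `wait_for_rancher`, the URL default, the retry count and the interval are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: poll the Rancher container until its /ping endpoint answers "pong".
# RANCHER_URL, the retry count and the interval are assumptions; adjust them.
RANCHER_URL="${RANCHER_URL:-https://127.0.0.1}"

wait_for_rancher() {  # wait_for_rancher [tries] [interval-seconds]
  local tries="${1:-60}" interval="${2:-5}" i
  for ((i = 1; i <= tries; i++)); do
    # -k: a fresh Rancher container serves a self-signed certificate
    if [ "$(curl -ks --max-time 5 "$RANCHER_URL/ping")" = "pong" ]; then
      echo "Rancher is up after $i attempt(s)"
      return 0
    fi
    sleep "$interval"
  done
  echo "no answer from $RANCHER_URL/ping" >&2
  return 1
}

# Usage on master01, right after docker run (up to about 5 minutes):
#   wait_for_rancher 60 5 || docker logs --tail 50 rancher
```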

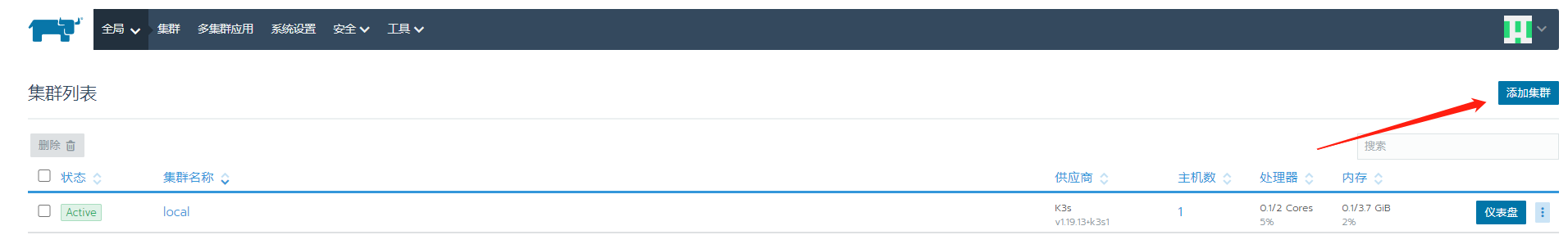

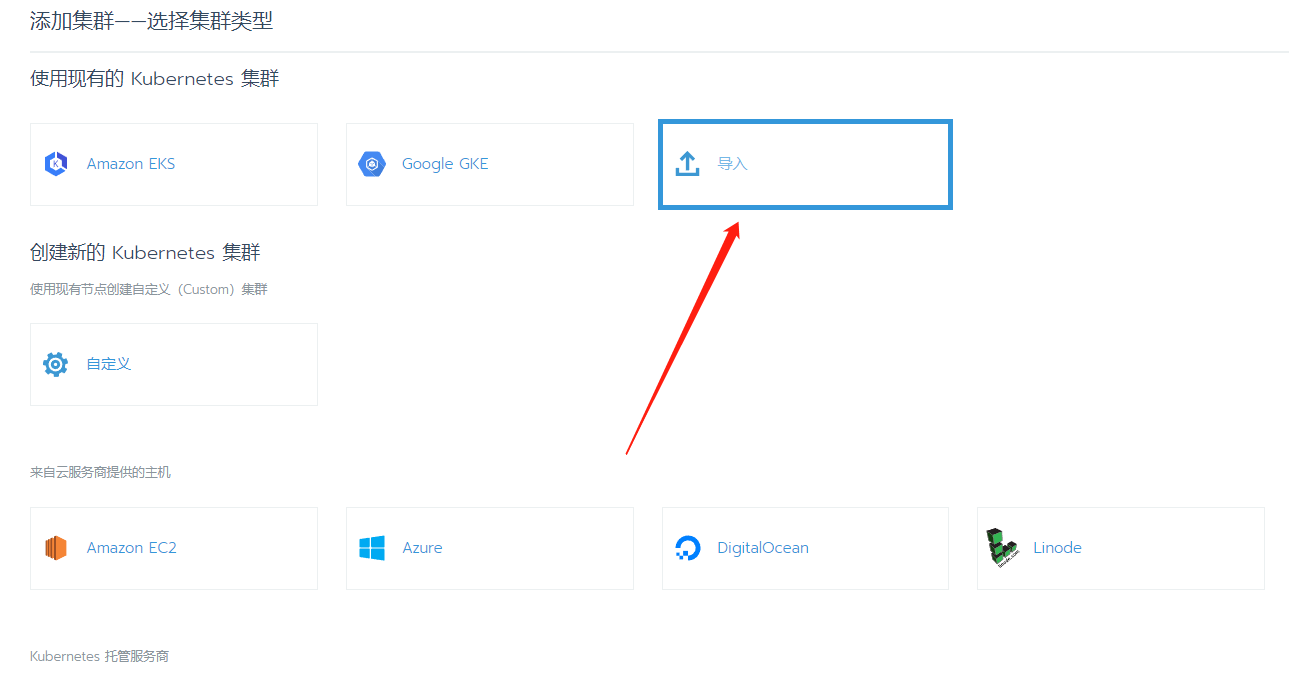

Create the initial user, log in, and add a cluster:

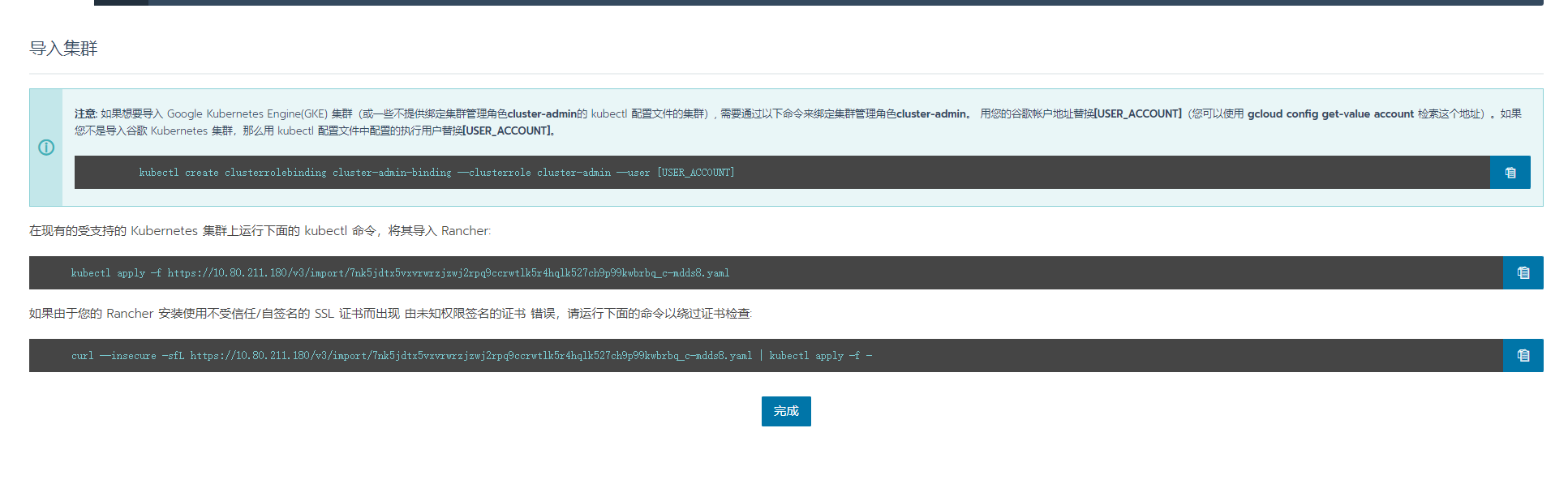

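Then run the command shown on master01.

If it fails with a certificate error like the following (Rancher's self-signed certificate does not cover the host IP):

kubectl apply -f https://10.80.211.180/v3/import/7nk5jdtx5vxvrwrzjzwj2rpq9ccrwtlk5r4hqlk527ch9p99kwbrbq_c-mdds8.yaml

Unable to connect to the server: x509: certificate is valid for 127.0.0.1, 172.17.0.2

run the other command instead, which tells curl to skip certificate verification: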

curl --insecure -sfL https://10.80.211.180/v3/import/7nk5jdtx5vxvrwrzjzwj2rpq9ccrwtlk5r4hqlk527ch9p99kwbrbq_c-mdds8.yaml | kubectl apply -f -

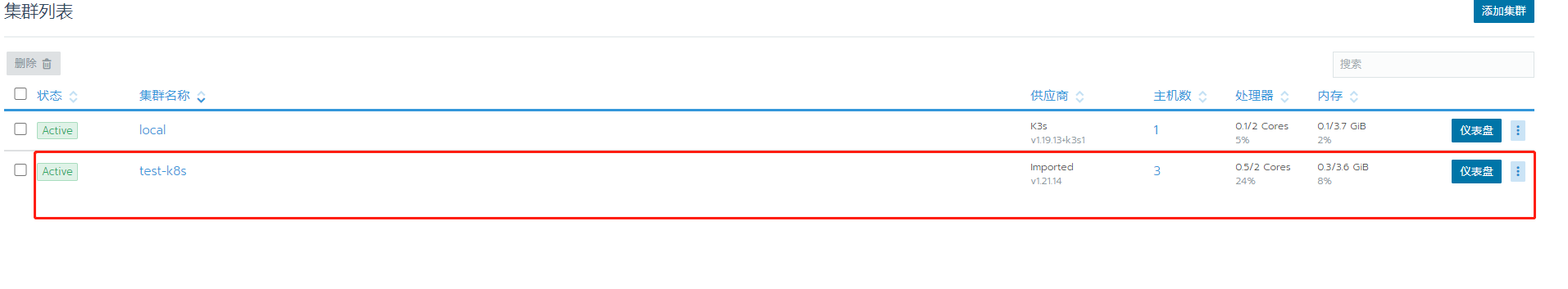

Once the command succeeds, the cluster is added to Rancher.

Article reference: https://zhuanlan.zhihu.com/p/425139452

Video reference: https://www.bilibili.com/video/BV1aa411G7dW?is_story_h5=false&p=1&share_from=ugc&share_medium=iphone&share_plat=ios&share_session_id=7C8FC235-2C79-4369-A42C-9FAD879348E5&share_source=WEIXIN&share_tag=s_i&timestamp=1663514093&unique_k=ypS5fDk