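Building an SRS Pseudo-Cluster on Two Servers: a Hands-On (手摸手) Log

Background

Why only two? Because this is still at the research stage: no customer is going to hand you 6-8 servers just to build an SRS cluster, so I had to validate feasibility with the bare minimum. This is a hands-on (手摸手) walkthrough; if it helps, remember to send me a little red envelope (shameless online begging).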

| Host IP | Role | Notes |
|---|---|---|
| 10.80.210.103 | Origin + edge + (nginx + keepalived, master) | Ports: origin cluster rtmp 11935, http_api 11985; edge cluster rtmp 21935, http_api 21985, http_server 28080; VIP 10.80.210.105; nginx TCP: 1935 -> origin rtmp, 1985 -> origin http_api, 1936 -> edge rtmp, 1986 -> edge http_api; nginx HTTP: 80 -> edge http_server (28080) |
| 10.80.210.104 | Origin + edge + (nginx + keepalived, backup) | Ports: origin cluster rtmp 11935, http_api 11985; edge cluster rtmp 21935, http_api 21985, http_server 28080; VIP 10.80.210.105; nginx TCP: 1935 -> origin rtmp, 1985 -> origin http_api, 1936 -> edge rtmp, 1986 -> edge http_api; nginx HTTP: 80 -> edge http_server (28080) |

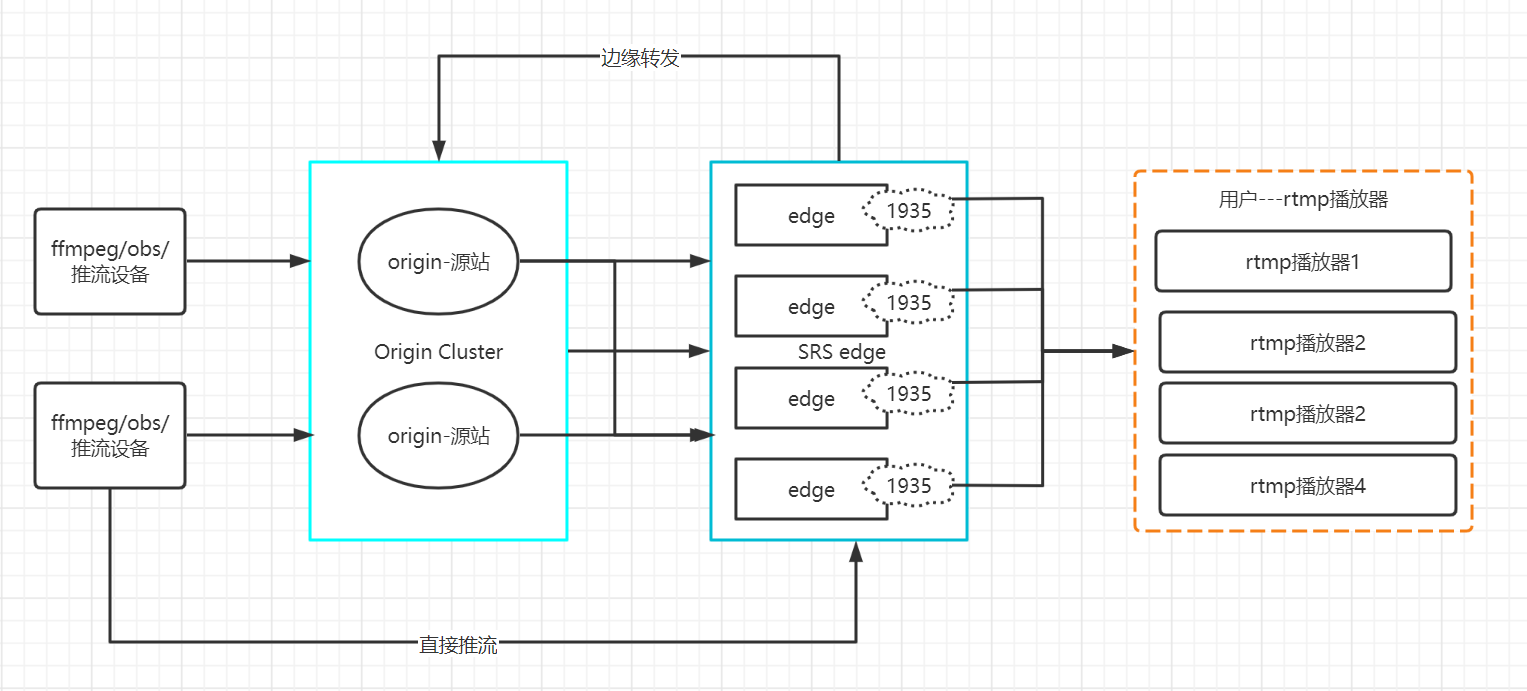

The goal is for each server to act as both origin and edge, so that the pair as a whole is highly available.
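To make the port plan in the table concrete, here is a small sketch that prints the client-facing URLs for one stream through the VIP. It is an illustration under assumptions, not part of SRS: the app and stream names are hypothetical, publishers are assumed to use nginx port 1935 (origin) and players 1936 (edge RTMP) or port 80 (edge HTTP-FLV), and the default vhost is assumed to add no prefix to the `[vhost]/[app]/[stream].flv` mount.

```shell
#!/bin/sh
# Sketch: print the client-facing URLs for one stream through the VIP.
# Assumptions (hypothetical): publishers use nginx port 1935 (-> origin RTMP),
# players use 1936 (-> edge RTMP) or port 80 (-> edge http_server, HTTP-FLV),
# and the default vhost adds no prefix to the http_remux mount path.
VIP="10.80.210.105"

stream_urls() {
    app="$1"      # e.g. live (hypothetical app name)
    stream="$2"   # e.g. test (hypothetical stream name)
    echo "publish:   rtmp://$VIP:1935/$app/$stream"
    echo "play rtmp: rtmp://$VIP:1936/$app/$stream"
    echo "play flv:  http://$VIP/$app/$stream.flv"
}
```

For example, `stream_urls live test` prints the three URLs for a stream named `test` under the app `live`.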

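Steps

Installing SRS 4.0 itself is skipped here.

Origin cluster configuration

Ports used by the origin cluster:

rtmp: 11935, http_api: 11985

10.80.210.103

Go to /usr/local/srs/conf (`cd /usr/local/srs/conf`) and create the configuration file origin.cluster.conf: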

```
# main config for srs.
# @see full.conf for detail config.

# RTMP listening port
listen              11935;
# maximum number of connections
max_connections     1000;
pid                 ./objs/origin_cluster.pid;
srs_log_tank        file;
#srs_log_level      trace;
srs_log_level       warn;
srs_log_file        ./objs/origin.cluster.log;
daemon              on;

http_api {
    enabled         on;
    listen          11985;
}

vhost __defaultVhost__ {
    hls {
        enabled         on;
        hls_fragment    10;
        hls_window      60;
        hls_path        ./objs/nginx/html;
        hls_m3u8_file   [app]/[stream].m3u8;
        hls_ts_file     [app]/[stream]-[seq].ts;
    }

    http_remux {
        enabled     on;
        mount       [vhost]/[app]/[stream].flv;
    }

    cluster {
        # cluster mode; for an origin cluster it must be local
        mode            local;
        # enable the origin cluster
        origin_cluster  on;
        # HTTP API addresses of the other origins in the cluster
        coworkers       10.80.210.104:11985;
    }
}
```

10.80.210.104

Same configuration as on 103; the only difference is that coworkers must point to 103:

```
# main config for srs.
# @see full.conf for detail config.

# RTMP listening port
listen              11935;
# maximum number of connections
max_connections     1000;
pid                 ./objs/origin_cluster.pid;
srs_log_tank        file;
#srs_log_level      trace;
srs_log_level       warn;
srs_log_file        ./objs/origin.cluster.log;
daemon              on;

http_api {
    enabled         on;
    listen          11985;
}

vhost __defaultVhost__ {
    hls {
        enabled         on;
        hls_fragment    10;
        hls_window      60;
        hls_path        ./objs/nginx/html;
        hls_m3u8_file   [app]/[stream].m3u8;
        hls_ts_file     [app]/[stream]-[seq].ts;
    }

    http_remux {
        enabled     on;
        mount       [vhost]/[app]/[stream].flv;
    }

    cluster {
        # cluster mode; for an origin cluster it must be local
        mode            local;
        # enable the origin cluster
        origin_cluster  on;
        # HTTP API addresses of the other origins in the cluster
        coworkers       10.80.210.103:11985;
    }
}
```
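Since the two origin configs differ only in the coworkers line, one option is to write the file once and derive the peer's copy with sed. This is a sketch; `derive_peer_conf` is a hypothetical helper, not part of SRS, and it assumes the address list ends with `;` on the same line as `coworkers`.

```shell
#!/bin/sh
# Sketch: derive the peer's origin.cluster.conf from this host's copy.
# The two files differ only in the coworkers line, so only that line is rewritten.
# derive_peer_conf is a hypothetical helper, not part of SRS.
# Usage: derive_peer_conf SRC DST PEER_API   (PEER_API such as 10.80.210.103:11985)
derive_peer_conf() {
    src="$1"
    dst="$2"
    peer_api="$3"
    # Keep the indentation and the "coworkers" keyword, replace the address list.
    sed "s/^\([[:space:]]*coworkers[[:space:]]\{1,\}\).*;/\1$peer_api;/" "$src" > "$dst"
}
```

For example, on 103, `derive_peer_conf ./conf/origin.cluster.conf /tmp/origin.cluster.conf 10.80.210.103:11985` writes a copy for 104, which can then be copied over (for instance with scp).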

Test startup

Run on both machines: `cd /usr/local/srs && ./objs/srs -c ./conf/origin.cluster.conf`

```
[root@srs104 init.d]# cd /usr/local/srs && ./objs/srs -c ./conf/origin.cluster.conf
[2022-07-05 23:12:39.561][Trace][14314][9x69f9uw] XCORE-SRS/4.0.253(Leo)
[2022-07-05 23:12:39.561][Trace][14314][9x69f9uw] config parse complete
[2022-07-05 23:12:39.561][Trace][14314][9x69f9uw] you can check log by: tail -n 30 -f ./objs/origin.cluster.log
[2022-07-05 23:12:39.561][Trace][14314][9x69f9uw] please check SRS by: ./etc/init.d/srs status
[root@srs104 srs]#
[root@srs104 srs]# netstat -nltp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:11985 0.0.0.0:* LISTEN 14316/./objs/srs
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1467/sshd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2123/master
tcp 0 0 0.0.0.0:11935 0.0.0.0:* LISTEN 14316/./objs/srs
tcp6 0 0 :::22 :::* LISTEN 1467/sshd
tcp6 0 0 ::1:25
```
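Rather than reading the netstat output by eye, a small check can confirm that both origin ports are listening. This is a sketch; `check_listen` is a hypothetical helper and assumes the `netstat -nltp` column layout shown above (local address in column 4, state in column 6).

```shell
#!/bin/sh
# Sketch: check that the given TCP ports are in LISTEN state.
# Reads `netstat -nltp` output on stdin; assumes netstat's column layout
# (local address in column 4, state in column 6). check_listen is hypothetical.
# Usage: netstat -nltp | check_listen 11935 11985
check_listen() {
    listing=$(cat)
    missing=0
    for port in "$@"; do
        # The local address must end in ":PORT" (IPv4 "0.0.0.0:PORT" or IPv6 ":::PORT").
        if printf '%s\n' "$listing" | awk -v p=":$port" '
            $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
            END { exit (found ? 0 : 1) }'
        then
            echo "port $port: listening"
        else
            echo "port $port: NOT listening"
            missing=1
        fi
    done
    return "$missing"
}
```

The function returns non-zero if any port is missing, so `netstat -nltp | check_listen 11935 11985 || echo "origin not up"` can be used in a quick health check.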

Running the origin-cluster SRS as a system service

Copy /usr/local/srs/etc/init.d/srs to /usr/local/srs/etc/init.d/srs.origin.cluster.

Main changes:

```shell
ROOT="/usr/local/srs"
APP="./objs/srs"
CONFIG="conf/origin.cluster.conf"
DEFAULT_PID_FILE='./objs/origin_cluster.pid'
DEFAULT_LOG_FILE='./objs/origin.cluster.log'
```
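The same edits can be scripted with sed. This is a sketch; `make_initd` is a hypothetical helper that assumes the stock script assigns ROOT, CONFIG, DEFAULT_PID_FILE and DEFAULT_LOG_FILE at the start of a line, and it leaves APP untouched because it stays `./objs/srs`.

```shell
#!/bin/sh
# Sketch: copy SRS's init.d script and point it at another config/pid/log.
# make_initd is hypothetical; it rewrites whole assignment lines, whatever
# their original values were, and leaves APP as it is.
# Usage: make_initd SRC DST CONFIG PID_FILE LOG_FILE
make_initd() {
    src="$1"; dst="$2"; conf="$3"; pid="$4"; log="$5"
    sed -e "s|^ROOT=.*|ROOT=\"/usr/local/srs\"|" \
        -e "s|^CONFIG=.*|CONFIG=\"$conf\"|" \
        -e "s|^DEFAULT_PID_FILE=.*|DEFAULT_PID_FILE='$pid'|" \
        -e "s|^DEFAULT_LOG_FILE=.*|DEFAULT_LOG_FILE='$log'|" \
        "$src" > "$dst"
    # keep the copy executable, like the original script
    chmod +x "$dst"
}
```

For the origin: `make_initd /usr/local/srs/etc/init.d/srs /usr/local/srs/etc/init.d/srs.origin.cluster conf/origin.cluster.conf ./objs/origin_cluster.pid ./objs/origin.cluster.log`.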

Copy /usr/local/srs/usr/lib/systemd/system/srs.service to /usr/local/srs/usr/lib/systemd/system/srs.origin.cluster.service.

Main changes:

```ini
[Unit]
Description=srs.origin.cluster
After=network.target

[Service]
Type=forking
Restart=always
ExecStart=/etc/init.d/srs.origin.cluster start
ExecReload=/etc/init.d/srs.origin.cluster reload
ExecStop=/etc/init.d/srs.origin.cluster stop

[Install]
WantedBy=multi-user.target
```
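Because the origin and edge units differ only in the service name, the unit file can also be generated from a heredoc. This is a sketch; `make_unit` is a hypothetical helper that prints the fields listed above under their [Unit], [Service] and [Install] sections.

```shell
#!/bin/sh
# Sketch: print a systemd unit that wraps /etc/init.d/NAME.
# make_unit is hypothetical; only the service name varies between units.
# Usage: make_unit srs.origin.cluster > srs.origin.cluster.service
make_unit() {
    name="$1"
    cat <<EOF
[Unit]
Description=$name
After=network.target

[Service]
Type=forking
Restart=always
ExecStart=/etc/init.d/$name start
ExecReload=/etc/init.d/$name reload
ExecStop=/etc/init.d/$name stop

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, `make_unit srs.origin.cluster > /usr/local/srs/usr/lib/systemd/system/srs.origin.cluster.service`, and likewise with `srs.edge.cluster` for the edge.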

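Enable the service at boot with systemctl

```shell
# expose the init.d script under /etc/init.d, where the unit file expects it
ln -sf /usr/local/srs/etc/init.d/srs.origin.cluster /etc/init.d/srs.origin.cluster
# stop the instance started manually during the test, so systemd can take over
/etc/init.d/srs.origin.cluster stop
# install the unit file, reload systemd and enable the service at boot
cp -f /usr/local/srs/usr/lib/systemd/system/srs.origin.cluster.service /usr/lib/systemd/system/srs.origin.cluster.service
systemctl daemon-reload
systemctl enable srs.origin.cluster
```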

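Edge cluster configuration

Ports used by the edge cluster:

rtmp: 21935, http_api: 21985, http_server: 28080

Configuration for 10.80.210.103 and 10.80.210.104 (identical on both)

Go to /usr/local/srs/conf (`cd /usr/local/srs/conf`) and create the configuration file edge.cluster.conf: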

```
# main config for srs.
# @see full.conf for detail config.

# RTMP listening port
listen              21935;
# maximum number of connections
max_connections     1000;
pid                 ./objs/edge_cluster.pid;
srs_log_tank        file;
#srs_log_level      trace;
srs_log_level       warn;
srs_log_file        ./objs/edge.cluster.log;
daemon              on;

http_api {
    enabled         on;
    listen          21985;
}

http_server {
    enabled         on;
    listen          28080;
    dir             ./objs/nginx/html;
}

rtc_server {
    enabled on;
    listen 8000; # UDP port
    # @see https://github.com/ossrs/srs/wiki/v4_CN_WebRTC#config-candidate
    candidate $CANDIDATE;
}

vhost __defaultVhost__ {
    hls {
        enabled         on;
        hls_fragment    10;
        hls_window      60;
        hls_path        ./objs/nginx/html;
        hls_m3u8_file   [app]/[stream].m3u8;
        hls_ts_file     [app]/[stream]-[seq].ts;
    }

    http_remux {
        enabled     on;
        mount       [vhost]/[app]/[stream].flv;
        hstrs       on;
    }

    dvr {
        enabled             on;
        dvr_apply           all;
        # DVR plan
        dvr_plan            segment;
        # recording path
        # dvr_path ./objs/nginx/html/[app]/[stream].[timestamp].flv;
        dvr_path            ./objs/nginx/html/[app]/[stream]/[2006]/[01]/[02]/[15].[04].[05].[999].flv;
        # segment length for the segment plan, in seconds
        dvr_duration        300;
        # wait for a keyframe before starting a new segment
        dvr_wait_keyframe   on;
        # timestamp jitter algorithm: full = full timestamp correction;
        # zero = only make timestamps start from 0; off = no correction
        time_jitter         full;
    }

    cluster {
        # cluster mode; remote for an edge cluster
        mode            remote;
        # RTMP addresses of the origin servers (not their HTTP API).
        # Open question: would pointing this at the VIP work?
        origin          10.80.210.103:11935 10.80.210.104:11935;
    }
}
```
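To preview what the dvr_path template turns into, here is a sketch that expands it by hand for a given time. `dvr_file` is hypothetical; the placeholder meanings ([2006] year, [01] month, [02] day, [15] hour, [04] minute, [05] second, [999] millisecond) follow SRS's Go-style time layout, and the arguments must not contain `/` because they are passed to sed unescaped.

```shell
#!/bin/sh
# Sketch: expand the dvr_path template for a given time, to preview file names.
# Placeholders follow SRS's Go-style layout: [2006]=year, [01]=month, [02]=day,
# [15]=hour, [04]=minute, [05]=second, [999]=millisecond.
# dvr_file is hypothetical; arguments must not contain "/" (they go into sed unescaped).
# Usage: dvr_file APP STREAM YEAR MONTH DAY HOUR MIN SEC MSEC
dvr_file() {
    template='./objs/nginx/html/[app]/[stream]/[2006]/[01]/[02]/[15].[04].[05].[999].flv'
    printf '%s\n' "$template" | sed \
        -e "s/\[app]/$1/" -e "s/\[stream]/$2/" \
        -e "s/\[2006]/$3/" -e "s/\[01]/$4/" -e "s/\[02]/$5/" \
        -e "s/\[15]/$6/" -e "s/\[04]/$7/" -e "s/\[05]/$8/" \
        -e "s/\[999]/$9/"
}
```

For example, `dvr_file live test 2022 07 05 23 12 39 561` prints `./objs/nginx/html/live/test/2022/07/05/23.12.39.561.flv`: one directory per stream and day, one file per 300-second segment.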

Test startup

Run on both machines: `cd /usr/local/srs && ./objs/srs -c ./conf/edge.cluster.conf`

Running the edge-cluster SRS as a system service

Much the same as for the origin.

Copy /usr/local/srs/etc/init.d/srs to /usr/local/srs/etc/init.d/srs.edge.cluster.

Main changes:

```shell
ROOT="/usr/local/srs"
APP="./objs/srs"
CONFIG="conf/edge.cluster.conf"
DEFAULT_PID_FILE='./objs/edge_cluster.pid'
DEFAULT_LOG_FILE='./objs/edge.cluster.log'
```

Copy /usr/local/srs/usr/lib/systemd/system/srs.service to /usr/local/srs/usr/lib/systemd/system/srs.edge.cluster.service.

Main changes:

```ini
[Unit]
Description=srs.edge.cluster
After=network.target

[Service]
Type=forking
Restart=always
ExecStart=/etc/init.d/srs.edge.cluster start
ExecReload=/etc/init.d/srs.edge.cluster reload
ExecStop=/etc/init.d/srs.edge.cluster stop

[Install]
WantedBy=multi-user.target
```

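Enable the service at boot with systemctl

```shell
# expose the init.d script under /etc/init.d, where the unit file expects it
ln -sf /usr/local/srs/etc/init.d/srs.edge.cluster /etc/init.d/srs.edge.cluster
# stop the instance started manually during the test, so systemd can take over
/etc/init.d/srs.edge.cluster stop
# install the unit file, reload systemd and enable the service at boot
cp -f /usr/local/srs/usr/lib/systemd/system/srs.edge.cluster.service /usr/lib/systemd/system/srs.edge.cluster.service
systemctl daemon-reload
systemctl enable srs.edge.cluster
```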
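The origin and edge services are installed with the same steps, differing only in the name, so they can be wrapped in one function. This is a sketch; `install_srs_service` and the `DRY_RUN` switch are hypothetical, and with `DRY_RUN=1` the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: the install steps above, parameterized by service name
# (srs.origin.cluster or srs.edge.cluster). install_srs_service and the
# DRY_RUN switch are hypothetical; with DRY_RUN=1 commands are only printed.

# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: install_srs_service NAME
install_srs_service() {
    name="$1"
    # expose the init.d script where the unit file expects it
    run ln -sf "/usr/local/srs/etc/init.d/$name" "/etc/init.d/$name"
    # stop the manually started test instance so systemd can take over
    run "/etc/init.d/$name" stop
    # install the unit, reload systemd and enable the service at boot
    run cp -f "/usr/local/srs/usr/lib/systemd/system/$name.service" \
        "/usr/lib/systemd/system/$name.service"
    run systemctl daemon-reload
    run systemctl enable "$name"
}
```

Running `DRY_RUN=1 install_srs_service srs.edge.cluster` first shows exactly what will happen; dropping `DRY_RUN=1` (as root) performs the steps.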

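Installing and configuring nginx (required on both the master and the backup)

Installed version: nginx-1.22.0-1.el7.ngx.x86_64.rpm

nginx configuration

Edit /etc/nginx/nginx.conf (`vi /etc/nginx/nginx.conf`) as follows: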

```nginx
user  nginx;
worker_processes  auto;

error_log  /var/log/nginx/error.log notice;
pid        /var/run/nginx.pid;

events {
    worker_connections  1024;
}

stream {
    # TCP (socket) forwarding proxies
    upstream origin_rtmp_proxy {
        hash $remote_addr consistent;
        # destination addresses and ports
        server 10.80.210.103:11935 weight=5 max_fails=3 fail_timeout=30s;
        server 10.80.210.104:11935 weight=5 max_fails=3 fail_timeout=30s;
    }
    # forwarding service: connections to port 1935 are proxied to the
    # addresses in upstream origin_rtmp_proxy
    server {
        listen 1935;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass origin_rtmp_proxy;
    }

    upstream origin_http_api_proxy {
        hash $remote_addr consistent;
        # destination addresses and ports
        server 10.80.210.103:11985 weight=5 max_fails=3 fail_timeout=30s;
        server 10.80.210.104:11985 weight=5 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 1985;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass origin_http_api_proxy;
    }

    upstream edge_rtmp_proxy {
        hash $remote_addr consistent;
        # destination addresses and ports
        server 10.80.210.103:21935 weight=5 max_fails=3 fail_timeout=30s;
        server 10.80.210.104:21935 weight=5 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 1936;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass edge_rtmp_proxy;
    }

    upstream edge_http_api_proxy {
        hash $remote_addr consistent;
        # destination addresses and ports
        server 10.80.210.103:21985 weight=5 max_fails=3 fail_timeout=30s;
        server 10.80.210.104:21985 weight=5 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 1986;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass edge_http_api_proxy;
    }
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log  logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;
    #keepalive_timeout  0;
    keepalive_timeout  65;
    #gzip  on;

    upstream srs {
        server 10.80.210.103:28080;
        server 10.80.210.104:28080;
    }

    server {
        listen       80;
        server_name  localhost;

        location ~ /* {
            proxy_pass http://srs;
            add_header Cache-Control no-cache;
            add_header Access-Control-Allow-Origin *;
        }

        # files and directories that must not be accessed
        location ~ ^/(\.user.ini|\.htaccess|\.git|\.svn|\.project|LICENSE|README.md)
        {
            return 404;
        }

        # directory used for one-click SSL certificate validation
        location ~ \.well-known {
            allow all;
        }

        location ~ .*\.(gif|jpg|jpeg|png|bmp|swf)$
        {
            expires      30d;
            error_log /dev/null;
            access_log /dev/null;
        }

        location ~ .*\.(js|css)?$
        {
            expires      12h;
            error_log /dev/null;
            access_log /dev/null;
        }
    }
}
```
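The four stream proxies differ only in upstream name, listen port and backend port. As a sketch, they can be generated from one template; `stream_proxy`, `stream_section` and `BACKENDS` are hypothetical names, and the output reproduces the upstream/server pairs of the stream block above.

```shell
#!/bin/sh
# Sketch: generate the four repetitive stream{} proxies from one template.
# stream_proxy, stream_section and BACKENDS are hypothetical names; the output
# mirrors the upstream/server pairs in the nginx.conf stream block.
BACKENDS="10.80.210.103 10.80.210.104"

# Usage: stream_proxy UPSTREAM_NAME LISTEN_PORT BACKEND_PORT
stream_proxy() {
    name="$1"; listen_port="$2"; backend_port="$3"
    echo "    upstream $name {"
    echo "        hash \$remote_addr consistent;"
    for ip in $BACKENDS; do   # unquoted on purpose: split the list on spaces
        echo "        server $ip:$backend_port weight=5 max_fails=3 fail_timeout=30s;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen $listen_port;"
    echo "        proxy_connect_timeout 1s;"
    echo "        proxy_timeout 3s;"
    echo "        proxy_pass $name;"
    echo "    }"
}

# Print the whole stream{} block: origin RTMP/API and edge RTMP/API.
stream_section() {
    echo "stream {"
    stream_proxy origin_rtmp_proxy     1935 11935
    stream_proxy origin_http_api_proxy 1985 11985
    stream_proxy edge_rtmp_proxy       1936 21935
    stream_proxy edge_http_api_proxy   1986 21985
    echo "}"
}
```

`hash $remote_addr consistent` keeps a given client IP on the same backend while both are up, which matters for the HTTP API and long-lived RTMP sessions. After pasting the generated block into nginx.conf, `nginx -t` validates the file before a reload.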

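Installing and configuring keepalived

Install keepalived:

```shell
yum install -y keepalived
```

Edit the configuration file (back up the original first):

```shell
cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
vi /etc/keepalived/keepalived.conf
```

Configuration on 103: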

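```
global_defs {
    # router id: identifier of the host running keepalived; must be unique
    router_id keep_host103
    #vrrp_skip_check_adv_addr
    #vrrp_strict
    #vrrp_garp_interval 0
    #vrrp_gna_interval 0
}

vrrp_script check_web {
    # location of the health-check script
    script "/etc/keepalived/check_web.sh"
    # run the script every 2 seconds
    interval 2
    # if the script fails, lower this node's priority by 20 so the VIP can move to the backup
    weight -20
}

vrrp_instance VI_1 {
    # MASTER on the primary node, BACKUP on the standby
    state MASTER
    # network interface this instance binds to
    interface ens192
    # must be identical on master and backup
    virtual_router_id 51
    # priority; the master's value must be higher than the backup's
    priority 100
    # interval between VRRP (multicast) advertisements: 1 second
    advert_int 1
    # authentication password, keeps unauthorized nodes out
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # virtual IP(s) (VIP); more than one may be listed
    virtual_ipaddress {
        10.80.210.105
    }
    # run the health-check script
    track_script {
        check_web
    }
}
```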

Configuration on 104:

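```
global_defs {
    # router id: identifier of the host running keepalived; must be unique
    router_id keep_host104
    #vrrp_skip_check_adv_addr
    #vrrp_strict
    #vrrp_garp_interval 0
    #vrrp_gna_interval 0
}

vrrp_script check_web {
    # location of the health-check script
    script "/etc/keepalived/check_web.sh"
    # run the script every 2 seconds
    interval 2
    # if the script fails, lower this node's priority by 20
    weight -20
}

vrrp_instance VI_1 {
    # MASTER on the primary node, BACKUP on the standby
    state BACKUP
    # network interface this instance binds to
    interface ens192
    # must be identical on master and backup
    virtual_router_id 51
    # priority; the master's value must be higher than the backup's
    priority 88
    # interval between VRRP (multicast) advertisements: 1 second
    advert_int 1
    # authentication password, keeps unauthorized nodes out
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # virtual IP(s) (VIP); more than one may be listed
    virtual_ipaddress {
        10.80.210.105
    }
    # run the health-check script
    track_script {
        check_web
    }
}
```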
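A worked check of the priorities: keepalived adds a negative vrrp_script weight to a node's priority while the script is failing, and the node with the higher effective priority holds the VIP. With master 100, backup 88 and weight -20, a failing check on 103 gives 100 - 20 = 80 < 88, so 104 takes over. The sketch below only does that arithmetic; it is not keepalived, and ties and preemption details are not modeled.

```shell
#!/bin/sh
# Sketch of the VRRP priority arithmetic above; this is not keepalived itself.
# A negative vrrp_script weight is added to the priority while the check fails.
# Ties and preemption details are not modeled.

# Usage: effective_priority BASE WEIGHT CHECK_OK   (CHECK_OK: 1 = check passed)
effective_priority() {
    base="$1"; weight="$2"; check_ok="$3"
    if [ "$check_ok" = 1 ]; then
        echo "$base"
    else
        echo $((base + weight))
    fi
}

# Usage: vip_holder MASTER_CHECK_OK BACKUP_CHECK_OK
vip_holder() {
    m=$(effective_priority 100 -20 "$1")   # 103: priority 100, weight -20
    b=$(effective_priority 88 -20 "$2")    # 104: priority 88, weight -20
    if [ "$m" -gt "$b" ]; then
        echo "103 (MASTER, $m vs $b)"
    else
        echo "104 (BACKUP, $b vs $m)"
    fi
}
```

`vip_holder 1 1` reports 103 (100 vs 88) and `vip_holder 0 1` reports 104 (88 vs 80). Note that check_web.sh in the next step exits with status 0 on every path except the one where it stops keepalived, so in practice failover is triggered by keepalived stopping on the master; the weight only comes into play if the script is changed to exit non-zero while nginx is down.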

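Writing the nginx health-check script

If nginx has stopped, the script first tries to restart it; if nginx is still not running 10 seconds later, it stops keepalived as well, so the VIP fails over to the backup host.

The script (`vi /etc/keepalived/check_web.sh`):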

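```bash
#!/bin/bash
# Count running nginx processes (master + workers).
num=$(ps -C nginx --no-header | wc -l)
if [ "$num" -eq 0 ]; then
    # nginx is down: try to restart it once
    systemctl restart nginx
    sleep 10
    num=$(ps -C nginx --no-header | wc -l)
    if [ "$num" -eq 0 ]; then
        # restart failed: stop keepalived so the VIP moves to the backup node
        systemctl stop keepalived
    fi
fi
```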

Make it executable: `chmod +x /etc/keepalived/check_web.sh`

Start keepalived and enable it at boot:

```shell
systemctl start keepalived
systemctl enable keepalived
```
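To confirm which node currently holds the VIP (for example after stopping nginx on 103 to test failover), a small check on `ip` output helps. This is a sketch; `holds_vip` is a hypothetical helper that assumes the one-line format of `ip -o -4 addr show`, where field 4 is ADDRESS/PREFIX.

```shell
#!/bin/sh
# Sketch: report whether this node currently holds the VIP.
# Reads `ip -o -4 addr show` output on stdin, where field 4 is ADDRESS/PREFIX
# (e.g. 10.80.210.105/32). holds_vip is a hypothetical helper.
# Usage: ip -o -4 addr show dev ens192 | holds_vip 10.80.210.105
holds_vip() {
    awk -v vip="$1" '
        { split($4, addr, "/"); if (addr[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }'
}
```

Run on each node: `ip -o -4 addr show dev ens192 | holds_vip 10.80.210.105 && echo "this node holds the VIP"`. Exactly one of the two nodes should print the message.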

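People usually say 手把手 ("hand-holding") tutorial. Is 手摸手 ("hand-caressing") a bit too risqué?

Hahaha~ it means pretty much the same as hand-holding; this is just a record of how I set it up myself.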