本文最后更新于 2001 天前,其中的信息可能已经有所发展或是发生改变。

使用环境CDH5.16.1

1.建立一个Hive管理Hbase表的外部表

下面是demo:

//切换到hive用户

sudo hive

create EXTERNAL table v2_mdt_grid_date_gh_cgi

(

key String,

cgi String,

gh String,

cnt_cm String,

cnt_ct String,

cnt_cu String,

cnt_nc String,

cnt_over_lap String,

cnt_rsrp_110 String,

cnt_rsrp_110_sinr_3 String,

cnt_rsrq String,

cnt_rsrq_15 String,

cnt_sc String,

cnt_sinr String,

cnt_sinr_0 String,

cnt_sinr_3 String,

over_lap_rate String,

sum_cmrsrp String,

sum_ctrsrp String,

sum_cursrp String,

sum_nc_rsrp String,

sum_rsrq String,

sum_sc_rsrp String,

sum_sinr String

)

row format delimited fields terminated by '|'

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES("hbase.columns.mapping" =

"

:key,

attr:cgi,

attr:gh,

imm:cnt_cm,

imm:cnt_ct,

imm:cnt_cu,

imm:cnt_nc,

imm:cnt_over_lap,

imm:cnt_rsrp_110,

imm:cnt_rsrp_110_sinr_3,

imm:cnt_rsrq,

imm:cnt_rsrq_15,

imm:cnt_sc,

imm:cnt_sinr,

imm:cnt_sinr_0,

imm:cnt_sinr_3,

imm:over_lap_rate,

imm:sum_cmrsrp,

imm:sum_ctrsrp,

imm:sum_cursrp,

imm:sum_nc_rsrp,

imm:sum_rsrq,

imm:sum_sc_rsrp,

imm:sum_sinr")

TBLPROPERTIES("hbase.table.name" = "v2.mdt.grid.date_gh_cgi");

其中hive表名不能含义“.”,hive字段与hbase字段一一对应。

ps:

快速获取hbase表字段方法:

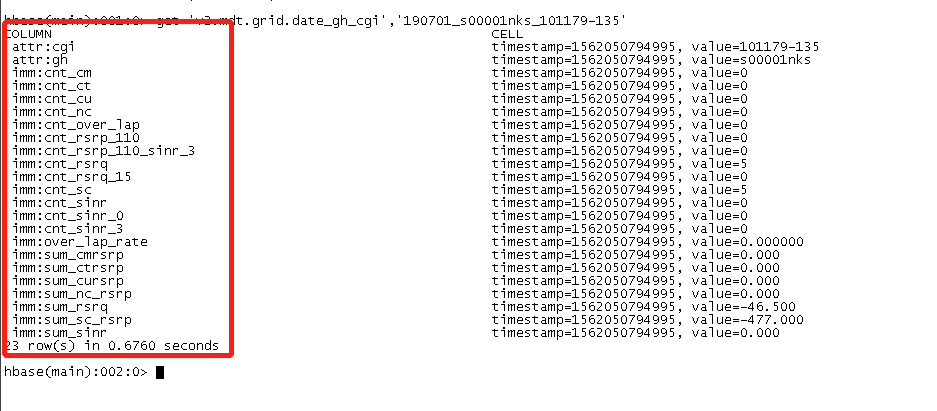

hbase shell

//获取所有hbase表

list

//查询某个表key

scan 'v2.mdt.grid.date_gh_cgi',{LIMIT=>1}

//根据key拿到对应的字段

get 'v2.mdt.grid.date_gh_cgi','190701_s00001nks_101179-135'

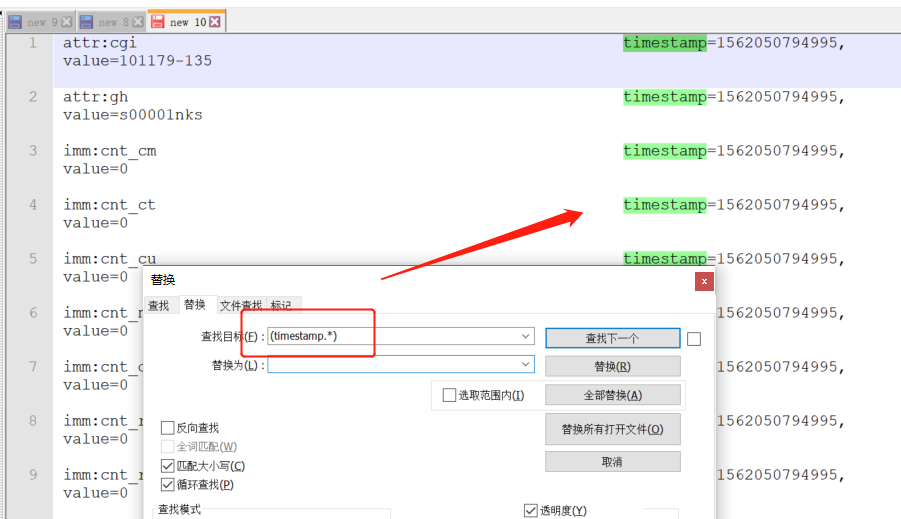

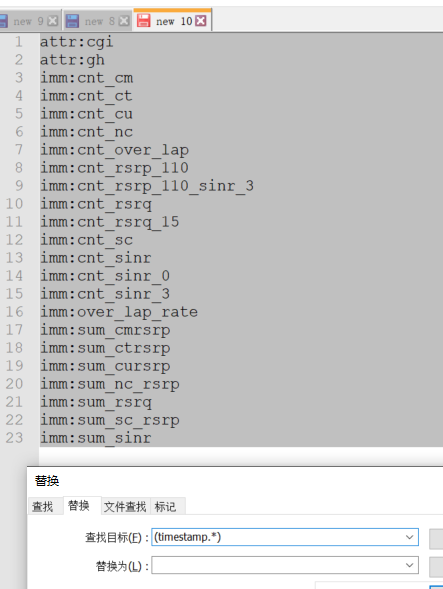

//如果遇到字段太多可退出执行:

echo "get 'v2.mdt.grid.date_gh_cgi','190701_s00001nks_101179-135'" | hbase shell > /home/xxx.txt

通过编辑器正则去除

2.检查是否创建表成功并且有了hbase表的数据:

sudo hive;

//连接hive

hive

#记得加分页不然后果很严重!!!!!!!!!

select * from v2_mdt_grid_date_gh_cgi limit 10;

在hive查询sql时记得加分页不然后果很严重!!!!!!!!!

3.导出数据到hdfs:

sudo hive;

//连接hive

hive

insert overwrite directory '/data1/output_glj'//导到hdfs那个位置。

row format delimited fields terminated by ','//分隔符 -->hvie默认列分隔符为:‘/001’

select * from v2_mdt_grid_date_gh_cgi limit 100;//(sql语句可以加自己的条件)

4.从HDFS导出数据到本地服务器:

getmerge命令是合并多个hdfs文件成一个

hadoop fs -getmerge /data1/output_glj/ /data1/output/glj_test.txt

参考:https://www.jianshu.com/p/86ff9d7459b7