GP & CDH Installation and Deployment Series: Installing CDH 5.16.1

Installation files

All files are placed in /home/tools/:

- jdk-8u131-linux-x64.rpm

- MySQL-5.6.31-1.linux_glibc2.5.x86_64.rpm-bundle.tar

- mysql-connector-java-5.1.36-bin.jar

- cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

- CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

- CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha(.sha1)

- manifest.json

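CentOS 7 was installed as a minimal system, so the following packages must be installed before CDH (some may report errors; run this on every host):

yum install chkconfig python bind-utils psmisc libxslt zlib sqlite fuse fuse-libs redhat-lsb cyrus-sasl-plain cyrus-sasl-gssapi autoconf -y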
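Because the yum command has to run on every host, a small SSH loop from the master can save some typing. This is a sketch under stated assumptions. It assumes passwordless root SSH. The host list (mdw96, sdw97, sdw98, sdw99) is a hypothetical example based on host names used later in this guide. It uses redhat-lsb, the lower-case package name on CentOS 7. By default it only prints the commands; set DRY_RUN=0 to run them.

```bash
#!/usr/bin/env bash
# Install the CDH prerequisite packages on every host over SSH.
# ASSUMPTIONS: passwordless root SSH; HOSTS is a hypothetical example list.
set -euo pipefail

HOSTS=(mdw96 sdw97 sdw98 sdw99)
PKGS="chkconfig python bind-utils psmisc libxslt zlib sqlite fuse fuse-libs redhat-lsb cyrus-sasl-plain cyrus-sasl-gssapi autoconf"
DRY_RUN=${DRY_RUN:-1}   # 1 = print the commands only, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

install_prereqs() {
  local h
  for h in "${HOSTS[@]}"; do
    run ssh "root@$h" "yum install -y $PKGS"
  done
}

install_prereqs
```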

Install the JDK (required on every host)

From the master server, copy the file to each sdw host:

[root@mdw96 tools]# scp -r jdk-8u131-linux-x64.rpm root@10.227.17.97:/home/tools/

….. (remaining hosts omitted)
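The copy to the remaining hosts can be scripted in the same way. This sketch assumes passwordless root SSH, and the host names sdw97 to sdw99 are hypothetical examples. By default it only prints the scp commands; set DRY_RUN=0 to run them.

```bash
#!/usr/bin/env bash
# Copy a file or directory from the master to the same location on other hosts.
# ASSUMPTIONS: passwordless root SSH; host names are examples. Dry-run unless DRY_RUN=0.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}

copy_to_hosts() {
  local src=$1 dest=$2 h
  shift 2
  for h in "$@"; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "scp -rp $src root@$h:$dest"
    else
      scp -rp "$src" "root@$h:$dest"
    fi
  done
}

copy_to_hosts /home/tools/jdk-8u131-linux-x64.rpm /home/tools/ sdw97 sdw98 sdw99
```

The same helper also fits the later step that copies /opt/cm-5.16.1: copy_to_hosts /opt/cm-5.16.1 /opt/ sdw97 sdw98 sdw99.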

Find any previously installed Java packages:

rpm -qa | grep java

If anything is found, run rpm -e --nodeps followed by the listed package names to remove every installed Java package.
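Instead of copying package names by hand, you can turn the query output into a single removal command. This is a sketch. The function reads package names on stdin (normally from rpm -qa) and matches them case-insensitively. It only prints the rpm -e --nodeps command, so you can review the command before running it.

```bash
#!/usr/bin/env bash
# Build one "rpm -e --nodeps" command for every installed package matching a pattern.
set -euo pipefail

removal_cmd() {
  local pattern=$1 pkgs
  # Package names come from stdin (normally "rpm -qa"); no match is not an error.
  pkgs=$({ grep -iE -- "$pattern" || true; } | tr '\n' ' ' | sed 's/ *$//')
  if [ -n "$pkgs" ]; then
    echo "rpm -e --nodeps $pkgs"
  fi
}
```

Usage: rpm -qa | removal_cmd java prints the command for Java. rpm -qa | removal_cmd 'mysql|mariadb' does the same for the MySQL step.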

Install the Java RPM (on every host):

rpm -ivh jdk-8u131-linux-x64.rpm

Verify the installation:

java -version

or

which java
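To check the version from a script rather than by eye, you can parse the first line of java -version. Note that java -version writes to stderr, which is why the usage line below uses 2>&1. This is a sketch that handles both the old 1.8.0_131 numbering and the newer 11.0.x numbering.

```bash
#!/usr/bin/env bash
# Print the Java major version from a "java -version" output line.
set -euo pipefail

java_major() {
  local line=$1 ver
  # Take the text between the quotes, e.g. 1.8.0_131 or 11.0.2
  ver=$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case $ver in
    1.*) ver=${ver#1.} ;;   # old scheme: 1.8.0_131 -> 8.0_131
  esac
  echo "${ver%%.*}"         # keep everything before the first dot
}
```

Usage: java_major "$(java -version 2>&1 | head -n 1)" should print 8 after installing jdk-8u131.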

Offline installation of MySQL 5.6.31 (on mdw only)

Extract the MySQL tar bundle:

[root@mdw96 data]# cd tools/

[root@mdw96 tools]# tar -xf MySQL-5.6.31-1.linux_glibc2.5.x86_64.rpm-bundle.tar

[root@mdw96 tools]# ls

- MySQL-devel-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-shared-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-5.6.31-1.linux_glibc2.5.x86_64.rpm-bundle.tar

- MySQL-embedded-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-shared-compat-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-client-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-server-5.6.31-1.linux_glibc2.5.x86_64.rpm

- MySQL-test-5.6.31-1.linux_glibc2.5.x86_64.rpm

Find any existing MySQL or MariaDB packages and remove them:

rpm -qa | grep mysql

rpm -qa | grep mariadb

If anything is found, run rpm -e --nodeps followed by the listed package names to remove every installed MySQL and MariaDB package.

Install the MySQL server RPM:

rpm -ivh MySQL-server-5.6.31-1.linux_glibc2.5.x86_64.rpm

Start the MySQL service:

service mysql start

Install the MySQL client and the remaining packages:

rpm -ivh MySQL-client-5.6.31-1.linux_glibc2.5.x86_64.rpm

rpm -ivh MySQL-devel-5.6.31-1.linux_glibc2.5.x86_64.rpm

rpm -ivh MySQL-embedded-5.6.31-1.linux_glibc2.5.x86_64.rpm

rpm -ivh MySQL-shared-5.6.31-1.linux_glibc2.5.x86_64.rpm

rpm -ivh MySQL-shared-compat-5.6.31-1.linux_glibc2.5.x86_64.rpm

rpm -ivh MySQL-test-5.6.31-1.linux_glibc2.5.x86_64.rpm

When the installation finishes, note the initial root password:

cat /root/.mysql_secret

# The random password set for the root user at Sat Apr 1 19:37:12 2020 (local time):

Cpb43u6T_D2qmg9M
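The password can also be read from the file in a script. In MySQL 5.6 RPM installs, the password usually appears on the same line as the comment, after "(local time): ". The listing above shows it on its own line instead, so this sketch accepts both layouts.

```bash
#!/usr/bin/env bash
# Print the initial MySQL root password from a .mysql_secret file.
set -euo pipefail

mysql_initial_password() {
  local file=${1:-/root/.mysql_secret}
  awk '
    /\(local time\):/ {
      # One-line layout: the password follows "(local time): "
      sub(/.*\(local time\):[ \t]*/, "")
      if ($0 != "") { print; exit }
      next
    }
    # Two-line layout: first non-comment, non-empty line
    !/^#/ && NF > 0 { print; exit }
  ' "$file"
}
```

Usage: mysql_initial_password (reads /root/.mysql_secret by default).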

Log in to MySQL to change the password and grant privileges:

mysql -u root -p mysql

Enter password: Cpb43u6T_D2qmg9M

Set the MySQL password and privileges:

mysql> SET PASSWORD = PASSWORD('Sdcmcc@139.com');

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on *.* to root@'%' identified by 'Sdcmcc@139.com';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on *.* to root@'127.0.0.1' identified by 'Sdcmcc@139.com';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on *.* to root@'localhost' identified by 'Sdcmcc@139.com';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on *.* to root@'mdw132' identified by 'Sdcmcc@139.com';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all privileges on *.* to 'root'@'mdw132' identified by 'Sdcmcc@139.com' with grant option;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> quit

Bye
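The grants above differ only in the host part, so you can generate them. This sketch prints the statements for a list of hosts and is meant to be piped into mysql. It does not escape quotes, so it assumes the password contains no single quote. It also leaves out the WITH GRANT OPTION used above for mdw132. GRANT ... IDENTIFIED BY works on MySQL 5.6 but was removed in MySQL 8.0.

```bash
#!/usr/bin/env bash
# Generate the GRANT statements for root from several client hosts.
# ASSUMPTION: the password contains no single quote (it is not escaped).
set -euo pipefail

grant_sql() {
  local pass=$1 h
  shift
  for h in "$@"; do
    printf "GRANT ALL ON *.* TO 'root'@'%s' IDENTIFIED BY '%s';\n" "$h" "$pass"
  done
  echo "FLUSH PRIVILEGES;"
}
```

Usage: grant_sql 'Sdcmcc@139.com' '%' 127.0.0.1 localhost mdw132 | mysql -u root -p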

Check that the change took effect:

[root@mdw96 tools]# mysql -u root -p

Enter password: Sdcmcc@139.com

mysql> show databases;


+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| test |

+--------------------+

Install CDH (on mdw only)

[root@mdw96 opt]# cd /home/tools/CDH-5.16.1-1.cdh5.16.1.p0.3

[root@mdw96]# cp cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz /opt

[root@mdw96 tools]# cp mysql-connector-java-5.1.36-bin.jar /opt

[root@mdw96 tools]# cd /opt

[root@mdw96 opt]# tar -xf cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

[root@mdw96 opt]# ls

[root@mdw96 opt]# cp mysql-connector-java-5.1.36-bin.jar /opt/cm-5.16.1/share/cmf/lib/

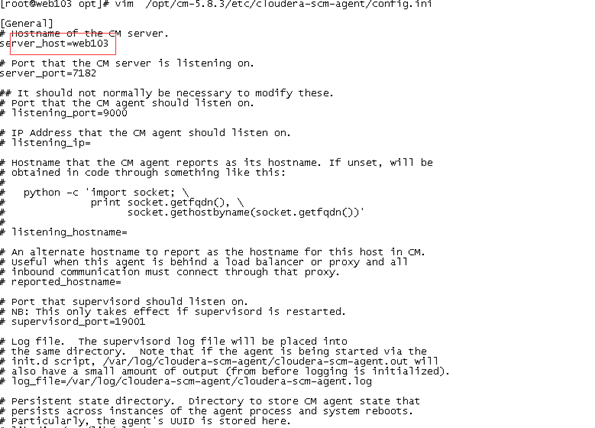

Set server_host in config.ini

Note: server_host must be the actual hostname of the Cloudera Manager server host.
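Here is a sketch of that edit using sed. It makes two assumptions you should check on your system. First, the tarball layout keeps the agent configuration at /opt/cm-5.16.1/etc/cloudera-scm-agent/config.ini. Second, the file contains a server_host= line (localhost by default). mdw96 in the usage line is this guide's master host name.

```bash
#!/usr/bin/env bash
# Point the Cloudera Manager agent at the CM server host.
# ASSUMPTION: config.ini path below (tarball install layout); pass another path as $2.
set -euo pipefail

set_server_host() {
  local host=$1
  local ini=${2:-/opt/cm-5.16.1/etc/cloudera-scm-agent/config.ini}
  # Replace the whole server_host=... line, keeping every other setting intact
  sed -i "s/^server_host=.*/server_host=${host}/" "$ini"
  grep '^server_host=' "$ini"   # show the result
}
```

Usage: set_server_host mdw96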

Next, copy the parcel files into the local parcel repository, /opt/cloudera/parcel-repo/:

[root@mdw96 opt]# cd /home/tools/

[root@mdw96 tools]# cd CDH-5.16.1-1.cdh5.16.1.p0.3/

[root@mdw96 CDH-5.16.1-1.cdh5.16.1.p0.3]# cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel /opt/cloudera/parcel-repo/

[root@mdw96 CDH-5.16.1-1.cdh5.16.1.p0.3]# cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha /opt/cloudera/parcel-repo/

[root@mdw96 CDH-5.16.1-1.cdh5.16.1.p0.3]# cp manifest.json /opt/cloudera/parcel-repo/

[root@mdw96 CDH-5.16.1-1.cdh5.16.1.p0.3]# cd /opt/cloudera/parcel-repo/

[root@mdw96 parcel-repo]# ls

CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

manifest.json

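If the checksum file CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha has the suffix .sha1, rename it to .sha. Otherwise Cloudera Manager will still try to download the parcel during the CDH installation. This is an easy trap to fall into.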
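You can script the rename together with a checksum check, which also catches an incomplete copy. This sketch assumes each .sha or .sha1 file holds the parcel's hex SHA-1 digest as its first field. It renames any *.parcel.sha1 to *.parcel.sha, then compares each digest with the output of sha1sum.

```bash
#!/usr/bin/env bash
# Rename *.parcel.sha1 to *.parcel.sha and verify each parcel's SHA-1 digest.
# ASSUMPTION: the first field of each .sha file is the expected hex digest.
set -euo pipefail

prepare_parcel_repo() {
  local repo=${1:-/opt/cloudera/parcel-repo} f parcel expected actual
  for f in "$repo"/*.parcel.sha1; do
    [ -e "$f" ] || continue            # pattern matched nothing
    mv -- "$f" "${f%1}"                # X.parcel.sha1 -> X.parcel.sha
  done
  for f in "$repo"/*.parcel.sha; do
    [ -e "$f" ] || continue
    parcel=${f%.sha}
    expected=$(awk '{ print $1; exit }' "$f")
    actual=$(sha1sum "$parcel" | awk '{ print $1 }')
    if [ "$expected" != "$actual" ]; then
      echo "checksum mismatch: $parcel" >&2
      return 1
    fi
    echo "ok: $parcel"
  done
}
```

Usage: prepare_parcel_repo (defaults to /opt/cloudera/parcel-repo).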

Run on every host:

useradd --system --home=/opt/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm
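useradd fails if the account already exists, for example when you rerun the installation. An idempotent variant run over SSH may therefore be more convenient. This sketch assumes passwordless root SSH, and the host names are hypothetical. It only prints the commands unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Create the cloudera-scm system user on each host unless it already exists.
# ASSUMPTIONS: passwordless root SSH; host names passed as arguments.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}   # 1 = print the commands only, 0 = execute them
SCM_USER_CMD='id -u cloudera-scm >/dev/null 2>&1 || useradd --system --home=/opt/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm'

create_scm_user() {
  local h
  for h in "$@"; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh root@$h '$SCM_USER_CMD'"
    else
      ssh "root@$h" "$SCM_USER_CMD"
    fi
  done
}
```

Usage: create_scm_user mdw96 sdw97 sdw98 sdw99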

If you are reinstalling CDH, first drop the cm database in MySQL. Skip this on a first installation.

On the master, run:

[root@mdw96 opt]# /opt/cm-5.16.1/share/cmf/schema/scm_prepare_database.sh mysql cm -hlocalhost -uroot -pSdcmcc@139.com --scm-host localhost scm scm scm

Copy /opt/cm-5.16.1 from the master to each sdw host:

scp -rp /opt/cm-5.16.1 root@sdw97:/opt/

scp -rp /opt/cm-5.16.1 root@sdw98:/opt/

scp -rp /opt/cm-5.16.1 root@sdw99:/opt/

....

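Before starting the services, make the following changes (on every host):

Set swappiness to 0 so that swap is avoided as much as possible, and disable transparent huge pages: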

echo 0 > /proc/sys/vm/swappiness

echo never > /sys/kernel/mm/transparent_hugepage/defrag

cat >> /etc/sysctl.conf <<END

vm.swappiness=0

END

echo never > /sys/kernel/mm/transparent_hugepage/enabled

cat >> /etc/rc.local <<END

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

END
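To confirm that the settings took effect, and are still in place after a reboot, you can read the current values back. This is a sketch. It checks that swappiness is 0 and that both transparent_hugepage/enabled and transparent_hugepage/defrag have [never] selected. The optional argument points it at a copy of /proc and /sys and exists only for testing.

```bash
#!/usr/bin/env bash
# Verify swappiness and transparent huge page settings on this host.
set -euo pipefail

check_kernel_tuning() {
  local root=${1:-} status=0 f
  if [ "$(cat "$root/proc/sys/vm/swappiness")" != "0" ]; then
    echo "vm.swappiness is not 0"
    status=1
  fi
  for f in enabled defrag; do
    # The active mode is the bracketed one, e.g. "always madvise [never]"
    if ! grep -q '\[never\]' "$root/sys/kernel/mm/transparent_hugepage/$f"; then
      echo "transparent_hugepage/$f is not set to never"
      status=1
    fi
  done
  return "$status"
}
```

Run check_kernel_tuning with no argument on each host. On CentOS 7, /etc/rc.d/rc.local (the target of /etc/rc.local) is not executable by default. Run chmod +x /etc/rc.d/rc.local so that the lines appended to it run at boot.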

Starting and stopping the services:

1. Start the services on the master:

/opt/cm-5.16.1/etc/init.d/cloudera-scm-server start

/opt/cm-5.16.1/etc/init.d/cloudera-scm-agent start

2. Start the agent on each segment host:

/opt/cm-5.16.1/etc/init.d/cloudera-scm-agent start

To stop them, run the same commands with stop instead of start.

P.S.:

Stopping CDH reverses the start-up order:

1. Stop the cluster services first

2. Stop the Cloudera Management Service

3. Stop the agent on every node:

sudo /opt/cm-5.16.1/etc/init.d/cloudera-scm-agent stop

4. Stop the server on the master node:

sudo /opt/cm-5.16.1/etc/init.d/cloudera-scm-server stop

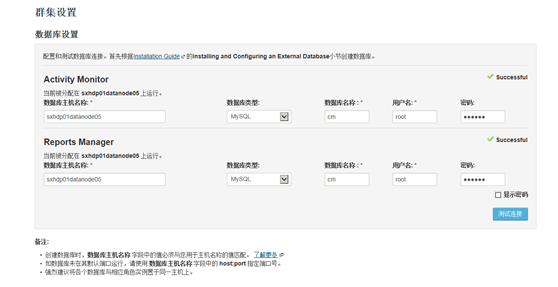

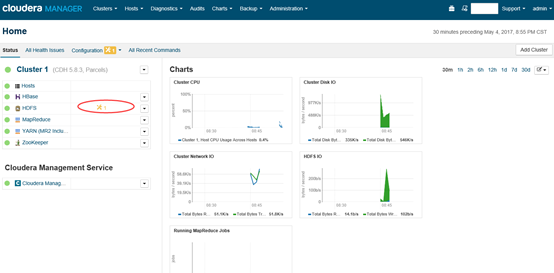
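You can wrap the start and stop order in small helpers. This sketch covers only the Cloudera Manager processes, that is, the agent and server steps above. The cluster services and the Cloudera Management Service are still stopped from the web UI first. CM_HOME defaults to this guide's /opt/cm-5.16.1. Commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Start/stop the Cloudera Manager server and agent in the documented order.
# ASSUMPTION: tarball layout under CM_HOME; dry-run unless DRY_RUN=0.
set -euo pipefail

CM_HOME=${CM_HOME:-/opt/cm-5.16.1}
DRY_RUN=${DRY_RUN:-1}

cm_ctl() {
  local action=$1 role=$2
  case $action in start|stop) ;; *) echo "unknown action: $action" >&2; return 2 ;; esac
  case $role in server|agent) ;; *) echo "unknown role: $role" >&2; return 2 ;; esac
  if [ "$DRY_RUN" = "1" ]; then
    echo "$CM_HOME/etc/init.d/cloudera-scm-$role $action"
  else
    "$CM_HOME/etc/init.d/cloudera-scm-$role" "$action"
  fi
}

# Master: server first, then its own agent; stopping reverses the order.
master_start() { cm_ctl start server && cm_ctl start agent; }
master_stop()  { cm_ctl stop agent && cm_ctl stop server; }
# Segment hosts run only the agent.
segment_start() { cm_ctl start agent; }
segment_stop()  { cm_ctl stop agent; }
```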

Web UI configuration

As shown below:

http://master-ip:7180/cmf (replace master-ip with the master's IP address)

Default username/password: admin/admin
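The web UI answers only once the server has finished starting, which can take a while, so a script may need to wait for the port. This sketch uses bash's /dev/tcp redirection, which works only in bash but needs no extra tools. The retry count and interval are arbitrary defaults.

```bash
#!/usr/bin/env bash
# Wait until HOST:PORT accepts TCP connections (e.g. the CM web UI on 7180).
set -euo pipefail

wait_for_port() {
  local host=$1 port=$2 tries=${3:-60} interval=${4:-5} i
  for ((i = 0; i < tries; i++)); do
    # Opening /dev/tcp/HOST/PORT in a subshell succeeds only if the port is open
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep "$interval"
  done
  echo "$host:$port is not reachable" >&2
  return 1
}
```

Usage: wait_for_port mdw96 7180 && echo "CM web UI is up"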

The screenshots below may look a little inconsistent because they were taken on servers in several different provinces.

Login page:

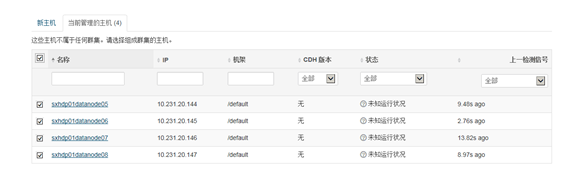

集群机器效果图

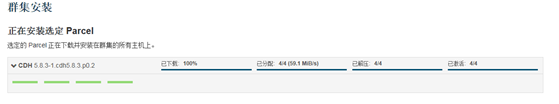

集群开始安装

集群安装中

parcel安装完成后反馈

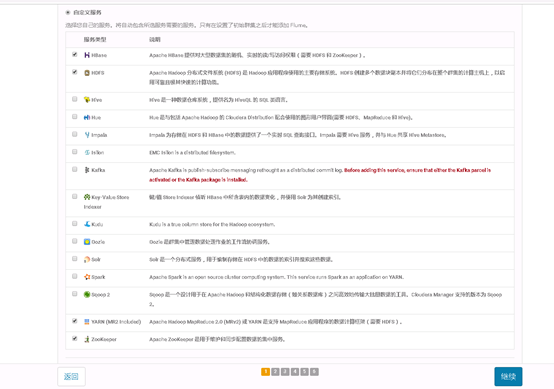

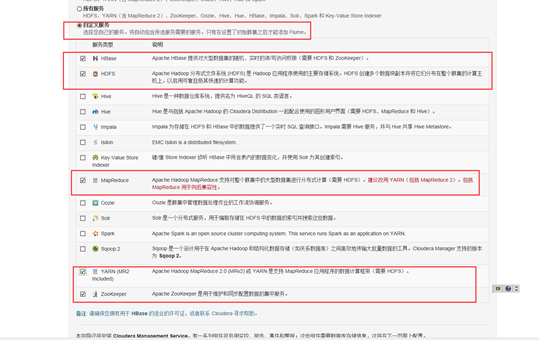

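Choose Custom Services!

Newer versions no longer list MapReduce. Note: do not select Hive at this stage; it is added separately later.

Old version: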

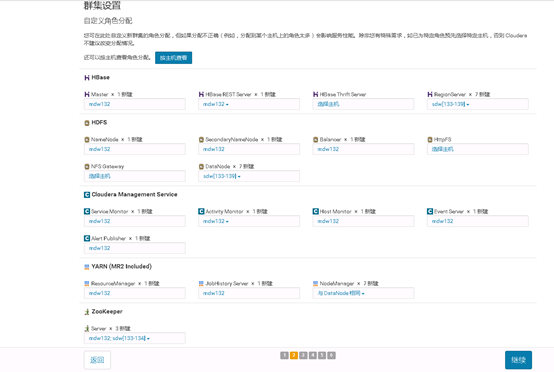

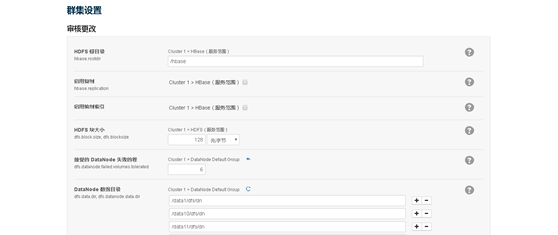

Mostly defaults. For ZooKeeper, assign it to the node servers and add at least two more hosts.

New:

Old:

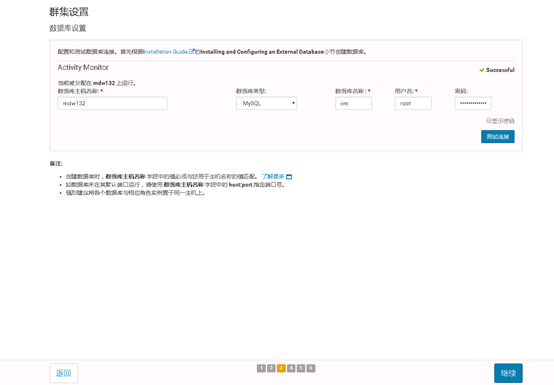

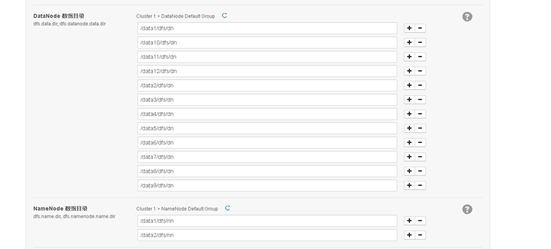

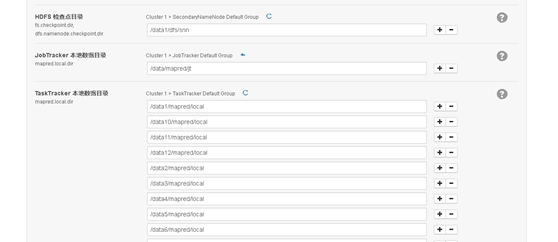

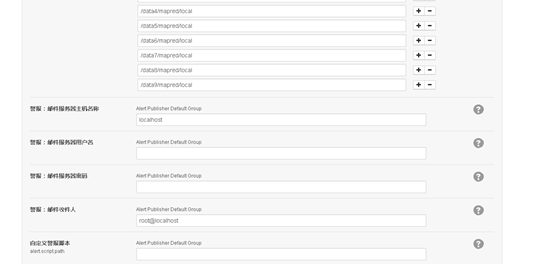

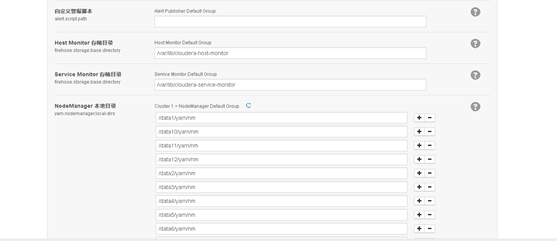

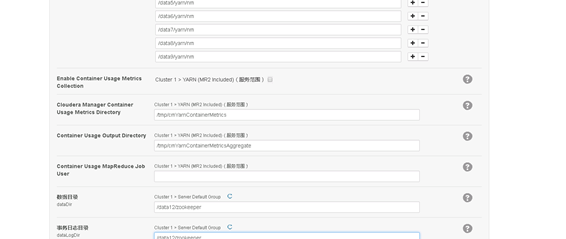

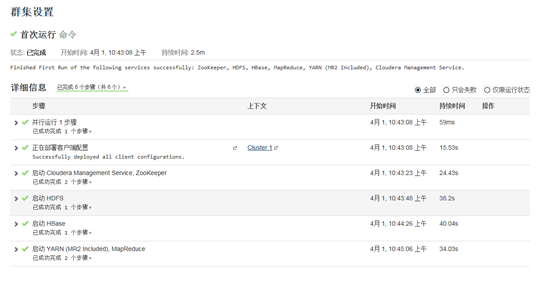

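Cluster setup

Keep the defaults, but put the ZooKeeper data directory on a larger disk, for example /data/zookeeper.

Cluster initialization

P.S.:

Silencing the clock alerts

After installation, the home page shows red alerts, mostly about host clock offset. You can turn them off in the web UI on the master server at ip:7180.

From the top menu, choose Hosts -> Configuration, search for "clock", and set the alert thresholds to "Never".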

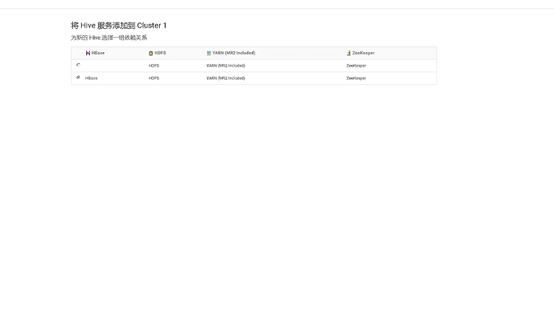

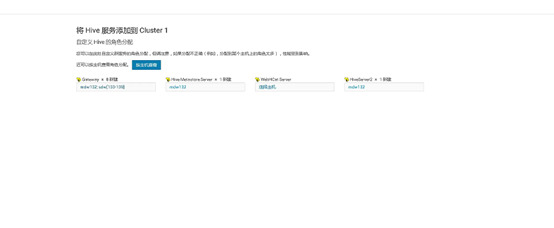

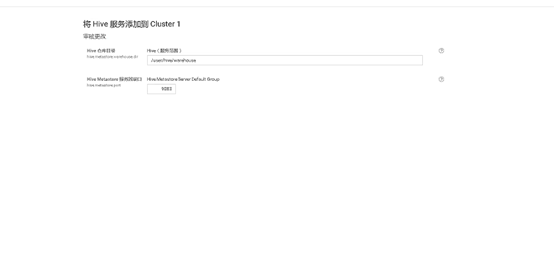

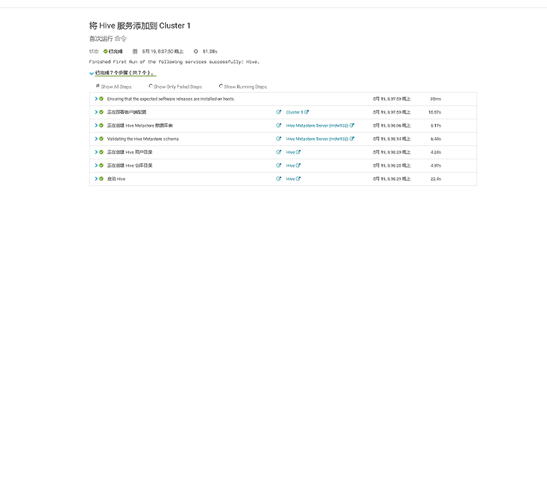

Adding the Hive service

First, create a symbolic link:

ln -s /opt/cm-5.16.1/share/cmf/lib/mysql-connector-java-5.1.36-bin.jar /usr/share/java/mysql-connector-java.jar

P.S.:

If the /usr/share/java directory does not exist, create it first with mkdir /usr/share/java.

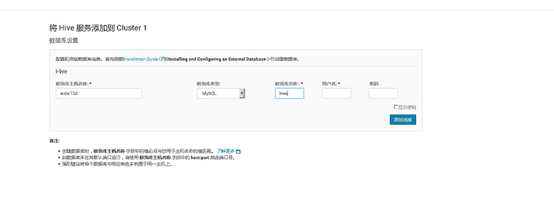
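Here is a sketch that makes this step safe to repeat. mkdir -p does nothing if the directory already exists, and ln -sfn replaces an existing link instead of failing. The default paths are the ones above; the arguments exist mainly for testing.

```bash
#!/usr/bin/env bash
# Create /usr/share/java/mysql-connector-java.jar pointing at the connector jar.
set -euo pipefail

link_mysql_connector() {
  local jar=${1:-/opt/cm-5.16.1/share/cmf/lib/mysql-connector-java-5.1.36-bin.jar}
  local dir=${2:-/usr/share/java}
  mkdir -p "$dir"                                   # no-op if it already exists
  ln -sfn "$jar" "$dir/mysql-connector-java.jar"    # replace any existing link
}
```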

Connect to MySQL and run:

[root@mdw96 tools]# mysql -u root -p

Enter password: Sdcmcc@139.com

-- create the hive database in MySQL

create database hive;

use hive;

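Install Hive

Accept the defaults, but choose a directory on a large disk.

After Hive is installed, connect to MySQL again:

mysql -u root -p

Enter password: Sdcmcc@139.com

Modify the hive metastore database: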

use hive;

alter table SERDE_PARAMS modify column PARAM_VALUE varchar(60000);

desc SERDE_PARAMS;

flush privileges;

show tables;

Modify the MySQL configuration

[root@mdw96 java]# vi /usr/my.cnf

# For advice on how to change settings please see

# http://dev.mysql.com/doc/refman/5.6/en/server-configuration-defaults.html

[mysqld]

# added or changed settings

port = 3306

socket = /var/lib/mysql/mysql.sock

character-set-server=utf8

max_connections = 2000

max_connect_errors = 2000

# Remove leading # and set to the amount of RAM for the most important data

# cache in MySQL. Start at 70% of total RAM for dedicated server, else 10%.

# innodb_buffer_pool_size = 128M

# Remove leading # to turn on a very important data integrity option: logging

# changes to the binary log between backups.

# log_bin

# These are commonly set, remove the # and set as required.

# basedir = .....

# datadir = .....

# port = .....

# server_id = .....

# socket = .....

# Remove leading # to set options mainly useful for reporting servers.

# The server defaults are faster for transactions and fast SELECTs.

# Adjust sizes as needed, experiment to find the optimal values.

# join_buffer_size = 128M

# sort_buffer_size = 2M

# read_rnd_buffer_size = 2M

sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES

Save the file and restart MySQL:

systemctl restart mysql

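Final step:

Switch to the GP master server, mdw96:

# switch to the gpadmin user

su gpadmin

# Run the following. Watch the version-specific values (highlighted in the original): cdh5 is the CDH major version (use cdh6 for CDH 6.x), and the parcel directory name carries the full CDH version.

gpconfig -c gp_hadoop_target_version -v "cdh5"

gpconfig -c gp_hadoop_home -v "'/opt/cloudera/parcels/CDH-5.16.1-1.cdh5.16.1.p0.3/lib/hadoop/client'"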
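To keep both values consistent with the parcel that is actually installed, you can derive them from the parcel directory name. This is a sketch, and the helper names are hypothetical. It takes the major version from the CDH-X.Y.Z prefix and prints the two gpconfig commands for review. Pass the directory without a trailing slash.

```bash
#!/usr/bin/env bash
# Derive the gphdfs settings from a CDH parcel directory name (hypothetical helpers).
set -euo pipefail

hadoop_target_from_parcel() {
  local name=${1##*/}              # drop any leading directories
  local major=${name#CDH-}         # 5.16.1-1.cdh5.16.1.p0.3
  major=${major%%.*}               # 5
  case $major in
    ''|*[!0-9]*) echo "unrecognized parcel name: $1" >&2; return 1 ;;
  esac
  echo "cdh$major"
}

gpconfig_cmds() {
  local parcel_dir=$1 target
  target=$(hadoop_target_from_parcel "$parcel_dir") || return 1
  echo "gpconfig -c gp_hadoop_target_version -v \"$target\""
  echo "gpconfig -c gp_hadoop_home -v \"'$parcel_dir/lib/hadoop/client'\""
}
```

Run the printed commands as gpadmin. Greenplum generally needs a configuration reload (gpstop -u) or a restart after gpconfig. Check the gphdfs documentation for which one these parameters require.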

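Finally, run a quick end-to-end test.

In the Hive CLI, create a table and insert a row:

hive

create table date_test1(id int,name string);

insert into date_test1 values(1,'wjian');

Check that the data files exist in HDFS:

hdfs dfs -ls hdfs://10.214.138.132:8020/user/hive/warehouse/date_test1/

In Greenplum (psql), read the files through gphdfs (\001 is Hive's default field delimiter):

drop EXTERNAL table if exists hdfs_test;

create EXTERNAL table hdfs_test(id int,name varchar(32))

location ('gphdfs://10.214.138.132:8020/user/hive/warehouse/date_test1/*')

format 'TEXT' (DELIMITER '\001')

;

select * from hdfs_test;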

The same test on the other clusters (different NameNode addresses and warehouse paths):

hdfs dfs -ls hdfs://10.227.17.96:8020/data1/hive/warehouse/date_test1/

hdfs dfs -ls hdfs://10.227.17.96:8020/data1/hive/warehouse/pm_lte_eutrancellfdd_hour_inner/

drop EXTERNAL table if exists hdfs_test;

create EXTERNAL table hdfs_test(id int,name varchar(32))

location ('gphdfs://10.227.17.96:8020/data1/hive/warehouse/date_test1/*')

format 'TEXT' (DELIMITER '\001')

;

select * from hdfs_test;

hdfs dfs -ls hdfs://10.224.230.171:8020/home/hive/warehouse/date_test1/

drop EXTERNAL table if exists hdfs_test;

create EXTERNAL table hdfs_test(id int,name varchar(32))

location ('gphdfs://10.224.230.171:8020/home/hive/warehouse/date_test1/*')

format 'TEXT' (DELIMITER '\001');

select * from hdfs_test;
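The three tests above differ only in the NameNode address and warehouse path, so you can generate the Greenplum part. This is a sketch, and gphdfs_ddl is a hypothetical helper. It prints the same DDL with DROP ... IF EXISTS, so it can be rerun, and keeps \001 as the delimiter.

```bash
#!/usr/bin/env bash
# Print Greenplum DDL that reads a Hive table's files through gphdfs (hypothetical helper).
set -euo pipefail

gphdfs_ddl() {
  local nn=$1 path=$2 table=${3:-hdfs_test}
  # Unquoted heredoc: $nn, $path, $table expand; \001 is kept literally.
  cat <<EOF
DROP EXTERNAL TABLE IF EXISTS $table;
CREATE EXTERNAL TABLE $table (id int, name varchar(32))
LOCATION ('gphdfs://$nn$path/*')
FORMAT 'TEXT' (DELIMITER '\001');
SELECT * FROM $table;
EOF
}
```

Usage: gphdfs_ddl 10.227.17.96:8020 /data1/hive/warehouse/date_test1 | psql -d DBNAME, where DBNAME is your Greenplum database.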

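Problems encountered:

1. Uncheck dfs.permissions in the HDFS configuration; otherwise, inserts fail the HDFS permission check.

2. On a machine that was used before, check whether Cloudera Manager and CDH are already installed.

Uninstall steps for CDH5: https://blog.csdn.net/wulantian/article/details/42706777/

Removal:

Unmount: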

umount /opt/cm-5.16.1/run/cloudera-scm-agent/process

# find leftover cloudera files (quote the pattern so the shell does not expand it)

find / -name 'cloudera*'

# check leftover files in /var/lib

cd /var/lib

rm -rf cloudera*

rm -rf hadoop*

rm -rf hbase

rm -rf hive

rm -rf zookeeper

rm -rf solr

rm -rf oozie

rm -rf sqoop*

# check leftover files in /var/run

cd /var/run

rm -rf cloudera*

rm -rf hadoop*

rm -rf hdfs*

rm -rf hive

# check leftover files in /opt

cd /opt/

rm -rf clouder*

# check leftover files in /var/log

cd /var/log

rm -rf hadoop*

rm -rf zookeeper*

rm -rf hbase*

rm -rf hive*

rm -rf hdfs

rm -rf mapred

rm -rf yarn

rm -rf sqoop*

rm -rf oozie

# check leftover files in /etc

cd /etc/

rm -rf hadoop*

rm -rf zookeeper*

rm -rf hive*

rm -rf hue

rm -rf impala

rm -rf sqoop*

rm -rf oozie

rm -rf hbase*

rm -rf hcatalog

# check leftover files in /usr/bin

cd /usr/bin/

rm -rf hadoop*

rm -rf zookeeper*

rm -rf hbase*

rm -rf hive*

rm -rf hdfs

rm -rf mapred

rm -rf yarn

rm -rf sqoop*

rm -rf oozie

# check whether anything was left behind

find / -name 'hadoop*'

Alternatives links (default-program information and configuration):

cd /etc/alternatives

rm -rf hadoop*

rm -rf zookeeper*

rm -rf hbase*

rm -rf hive*

rm -rf hdfs

rm -rf mapred

rm -rf yarn

rm -rf sqoop*

rm -rf oozie

cd /var/lib/alternatives

rm -rf hadoop*

rm -rf zookeeper*

rm -rf hbase*

rm -rf hive*

rm -rf hdfs

rm -rf mapred

rm -rf yarn

rm -rf sqoop*

rm -rf oozie

find / -name 'hadoop*'

Delete the account:

userdel -r cloudera-scm

Log in to MySQL and drop the cm database.

3. ProtocolError: <ProtocolError for 127.0.0.1/RPC2: 401 Unauthorized>

Error when installing cloudera-manager-agent

Check /var/log/cloudera-scm-agent.log

Error:

Traceback (most recent call last):

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/cmf-5.16.1-py2.7.egg/cmf/agent.py", line 2270, in connect_to_new_superviso

self.get_supervisor_process_info()

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/cmf-5.16.1-py2.7.egg/cmf/agent.py", line 2290, in get_supervisor_process_i

self.identifier = self.supervisor_client.supervisor.getIdentification()

File "/usr/lib64/python2.7/xmlrpclib.py", line 1233, in __call__

return self.__send(self.__name, args)

File "/usr/lib64/python2.7/xmlrpclib.py", line 1587, in __request

verbose=self.__verbose

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/xmlrpc.py", line 470, in request

'' )

ProtocolError: <ProtocolError for 127.0.0.1/RPC2: 401 Unauthorized>

[01/Dec/2018 20:23:29 +0000] 30267 MainThread agent ERROR Failed to connect to newly launched supervisor. Agent will exit

[01/Dec/2018 20:23:29 +0000] 30267 MainThread agent INFO Stopping agent...

[01/Dec/2018 20:23:29 +0000] 30267 MainThread agent INFO No extant cgroups; unmounting any cgroup roots

[01/Dec/2018 20:23:29 +0000] 30267 Dummy-1 daemonize WARNING Stopping daemon.

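Solution: a supervisord process started by an earlier agent is still running, and the newly launched agent cannot authenticate to it. Kill the old supervisord, then start the agent again.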

[root@dip001 ~]# ps -ef | grep supervisord

root 24491 1 0 11月30 ? 00:00:34 /usr/lib64/cmf/agent/build/env/bin/python /usr/lib64/cmf/agent/build/env/bin/supervisord

root 30335 30312 0 20:27 pts/0 00:00:00 grep --color=auto supervisord

[root@dip001 ~]# kill -9 24491

[root@dip001 ~]# ps -ef | grep supervisord

root 30338 30312 0 20:27 pts/0 00:00:00 grep --color=auto supervisord

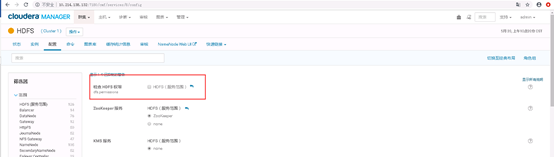

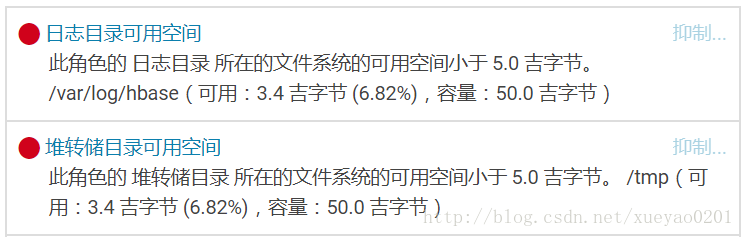
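Here is a sketch of the same fix for use in scripts. It finds the stale process with pgrep -f instead of reading ps output by eye. It sends SIGTERM rather than kill -9; fall back to -9 as shown above if the process does not exit. The pattern assumes the agent's supervisord command line contains cmf/agent/build/env/bin/supervisord, as in the ps output above. The script is dry-run by default. Start the agent again afterwards.

```bash
#!/usr/bin/env bash
# Stop supervisord processes left behind by an earlier Cloudera agent.
# ASSUMPTION: the command line matches the pattern below; dry-run unless DRY_RUN=0.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}
PATTERN='cmf/agent/build/env/bin/supervisord'

stale_supervisord_pids() {
  pgrep -f "$PATTERN" || true   # no match is not an error here
}

kill_stale_supervisord() {
  local pid
  for pid in $(stale_supervisord_pids); do
    if [ "$DRY_RUN" = "1" ]; then
      echo "kill $pid"
    else
      kill "$pid"               # SIGTERM; use kill -9 only if it survives
    fi
  done
}
```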

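4. Cloudera Manager alert: free space in the heap dump directory /tmp or the log directory /var/log is below 5.0 GiB

Cloudera Manager shows the following alert:

Solution

1. Check disk usage on the affected host

Log in to the affected host, change to the root directory, and run df -h.

The disk holding the logs turned out to be too small, so these directories were moved to a larger filesystem.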

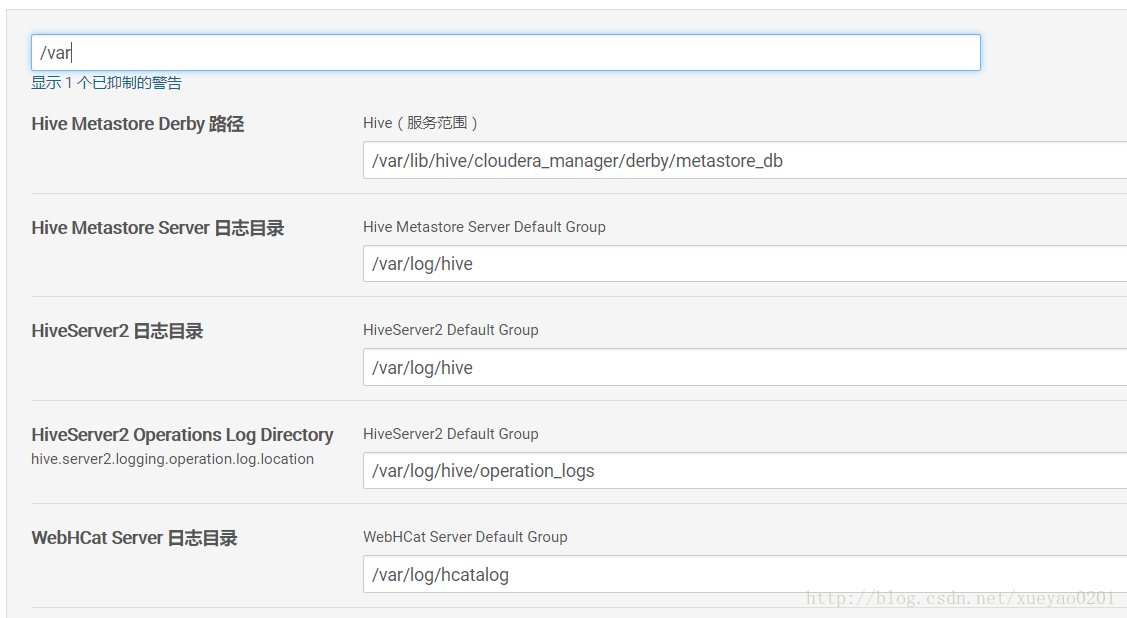

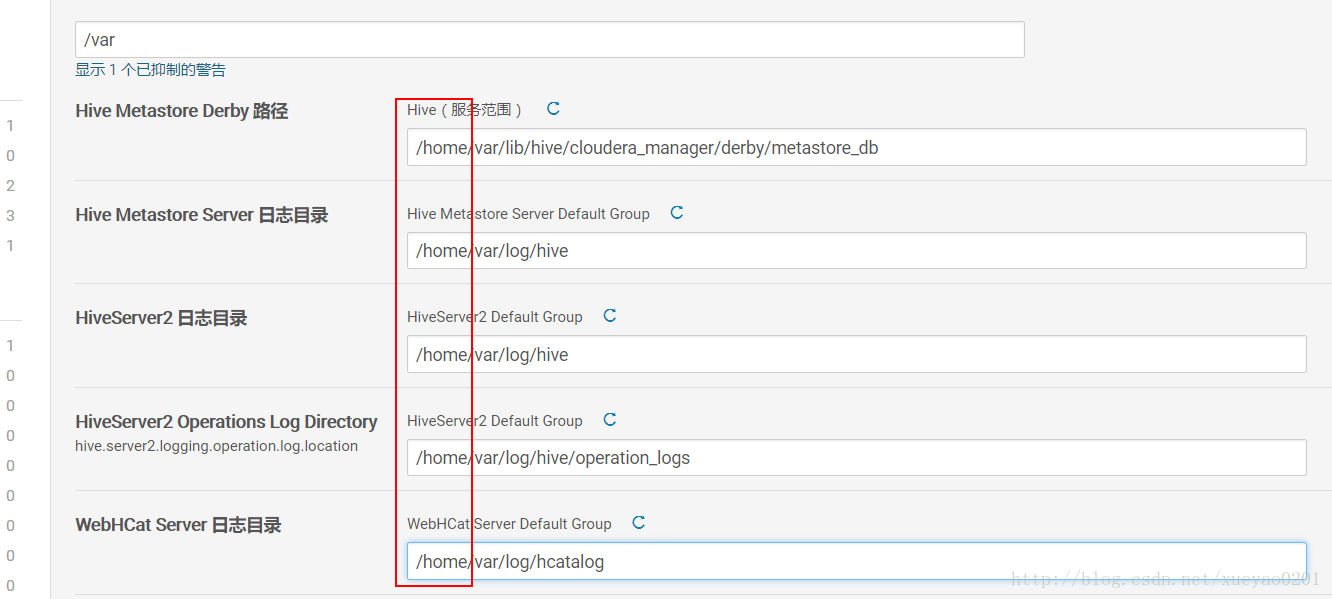
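You can script the same check against the 5 GiB threshold from the alert. This is a sketch. free_gib uses df -Pk (POSIX output, 1 KiB blocks), so the Available column is always the fourth field on the second line.

```bash
#!/usr/bin/env bash
# Check free space on the filesystems behind the alerted directories.
set -euo pipefail

free_gib() {
  # -P: one line per filesystem; -k: 1024-byte blocks; field 4 = available
  df -Pk -- "$1" | awk 'NR == 2 { print int($4 / 1048576) }'
}

check_free() {
  local path=$1 min=${2:-5} gib
  gib=$(free_gib "$path")
  if [ "$gib" -lt "$min" ]; then
    echo "WARN: $path has ${gib} GiB free (< ${min} GiB)"
    return 1
  fi
  echo "OK: $path has ${gib} GiB free"
}
```

Usage: for d in /tmp /var/log /home; do check_free "$d" || true; done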

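2. Edit the Hive, YARN, and Spark configurations in the cluster: search for settings containing /var, /tmp, or /yarn. Hive is used as the example here:

Prefix each of these paths with /home (the disk holding /home has much more free space).

Save the configuration and restart the cluster.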